Technology

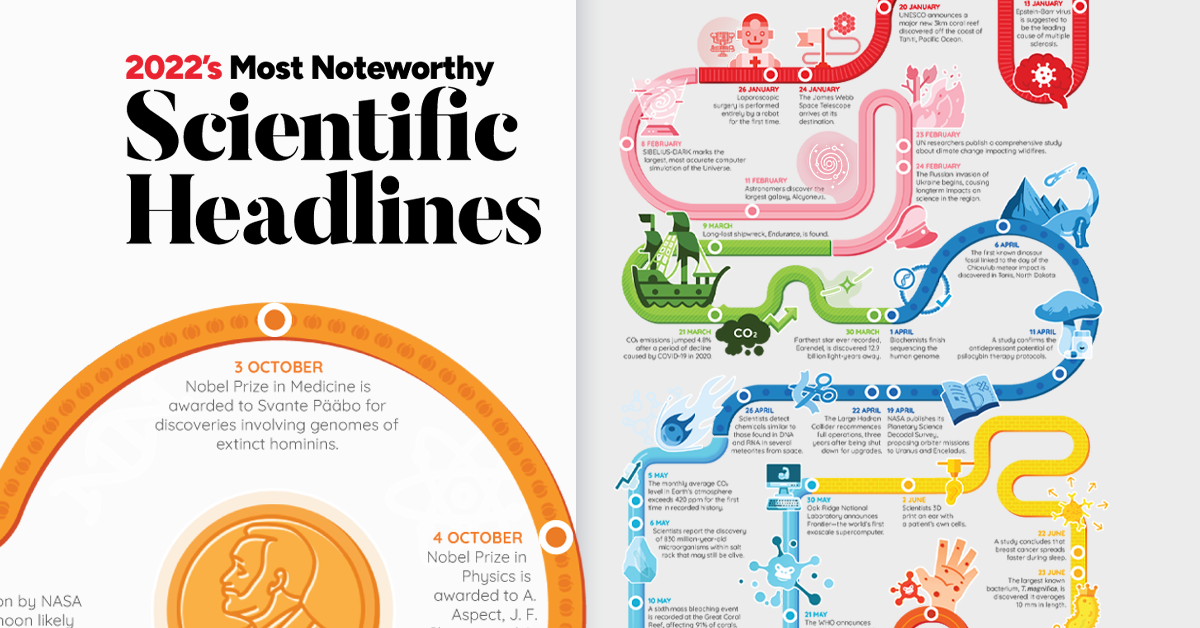

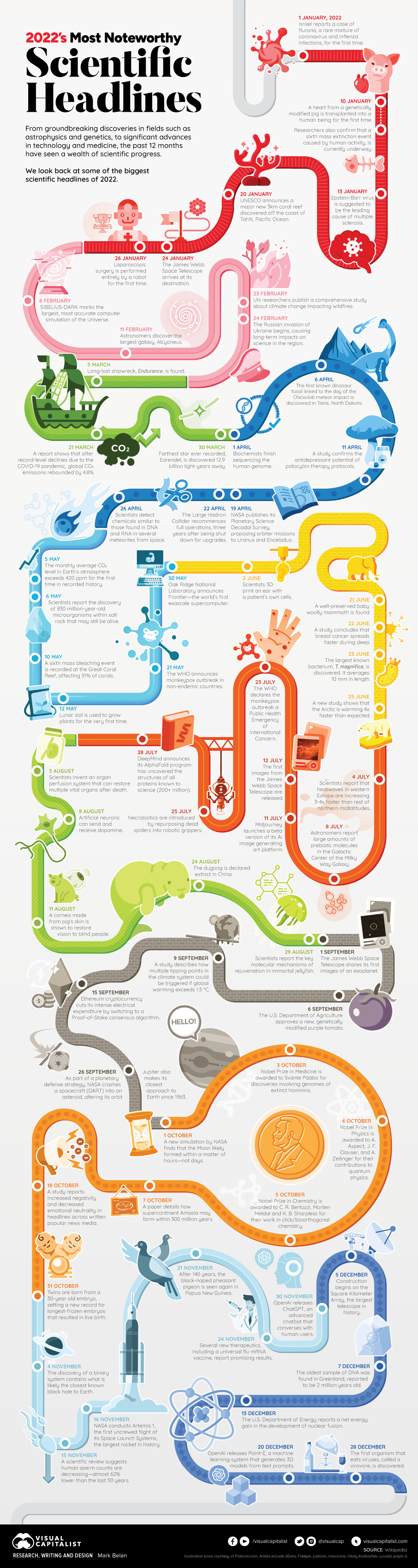

Timeline: The Most Important Science Headlines of 2022

Scientific discoveries and technological innovation play a vital role in addressing many of the challenges and crises that we face every year.

The last year may have come and gone quickly, but scientists and researchers have worked painstakingly hard to advance our knowledge within a number of disciplines, industries, and projects around the world.

With so much happening over the course of 2022, it’s easy to lose track of all the amazing stories in science and technology.

At a Glance: Major Scientific Headlines of 2022

Below we dive a little deeper into some of the most interesting headlines, while providing links in case you want to explore these developments further.

The James Webb Space Telescope Arrives at its Destination

What happened: A new space telescope brings promise of exciting findings and beautiful images from the final frontier. This telescope builds on the legacy of its predecessor, the Hubble Space Telescope, which launched over 30 years ago.

Why it matters: The James Webb Space Telescope is our latest state-of-the-art “window” into deep space. With more access to the infrared spectrum, new images, measurements, and observations of outer space will become available.

» To learn more, read this article from The Planetary Society, or watch this video from the Wall Street Journal.

Complete: The Human Genome

What happened: Scientists finish sequencing the human genome.

Why it matters: A complete human genome allows researchers to better understand the genetic basis of human traits and diseases. New therapies and treatments are likely to arise from this development.

» To learn more, watch this video by Two Minute Papers, or read this article from the NIH.

Monkeypox Breaks Out

What happened: A higher volume of cases of the monkeypox virus was reported in non-endemic countries.

Why it matters: In the wake of a global pandemic, researchers are keeping a closer eye on how diseases spread. The sudden spike in monkeypox cases across multiple countries raises questions about disease evolution and prevention.

» To learn more, read this article by the New York Times.

A Perfectly Preserved Woolly Mammoth

What happened: Gold miners unearth a 35,000-year-old, well-preserved baby woolly mammoth in the Yukon tundra.

Why it matters: The mammoth, named Nun cho ga by the Tr’ondëk Hwëch’in First Nation, is the most complete specimen discovered in North America to date. Each new discovery allows paleontologists to broaden our knowledge of biodiversity and how life changes over time.

» To learn more, read this article from Smithsonian Magazine.

The Rise of AI Art

What happened: Access to new computer programs, such as DALL-E and Midjourney, gives members of the general public the ability to create images from text prompts.

Why it matters: Widespread access to generative AI tools fuels inspiration and controversy alike. Concerns about artist rights and copyright violations grow as these programs threaten to diminish creative labor.

» To learn more, read this article by MyModernMet, or watch this video by Cleo Abram.

Dead Organs Get a Second Chance

What happened: Researchers create a perfusion system that can revitalize organs after cellular death. Using a special mixture of blood and nutrients, the system can sustain the organs of a dead pig and, in some cases, even promote cellular repair.

Why it matters: This discovery could potentially lead to a longer shelf life and a greater supply of organs for transplant.

» To learn more, read this article by Scientific American, or this article from the New York Times.

DART Delivers A Cosmic Nudge

What happened: NASA crashes a spacecraft into an asteroid just to see how much it would move. Dimorphos, a moonlet orbiting a larger asteroid called Didymos 6.8 million miles (11 million km) from Earth, is struck by the DART (Double Asteroid Redirection Test) spacecraft. NASA estimates that as much as 22 million pounds (10 million kg) was ejected after the impact.

Why it matters: Earth is constantly at risk of being struck by stray asteroids. Developing reliable methods of deflecting near-Earth objects could save us from meeting the same fate as the dinosaurs.

» To learn more, watch this video by Real Engineering, or read this article from Space.com.

Falling Sperm Counts

What happened: A scientific review suggests human sperm counts are decreasing—up to 62% over the past 50 years.

Why it matters: A lower sperm count makes it more difficult to conceive naturally. Because sperm count is a marker for overall health, the trend also raises concerns about declining male health worldwide. Researchers are looking at external stressors that may be driving this trend, such as diet and environment.

» To learn more, check out this article from the Guardian.

Finding Ancient DNA

What happened: Two-million-year-old DNA is found in Greenland.

Why it matters: DNA is a record of biodiversity. Apart from showing that a desolate Arctic landscape was once teeming with life, ancient DNA gives hints about how modern life emerged and how biodiversity evolves over time.

» To learn more, read this article from National Geographic.

Fusing Energy

What happened: The U.S. Department of Energy reports achieving net energy gain for the first time in the development of nuclear fusion.

Why it matters: Fusion is often seen as the Holy Grail of safe, clean energy, and this latest milestone brings researchers one step closer to harnessing nuclear fusion to power the world.

» To learn more, view our infographic on fusion, or read this article from the BBC.

Science in the New Year

The future of scientific research looks bright. Researchers and scientists are continuing to push the boundaries of what we know and understand about the world around us.

For 2023, some disciplines are likely to continue to dominate headlines:

- Advancement in space continues with projects like the James Webb Space Telescope and SETI COSMIC’s hunt for life beyond Earth

- Climate action may become more demanding as recovery from and prevention of extreme weather events continue into the new year

- Generative AI tools such as DALL-E and ChatGPT, opened to public use in 2022, have ignited widespread interest in the potential of artificial intelligence

- Even amidst the lingering shadow of COVID-19, new therapeutics should advance medicine into new territories

Where science is going remains to be seen, but this past year instills faith that 2023 will be filled with even more progress.


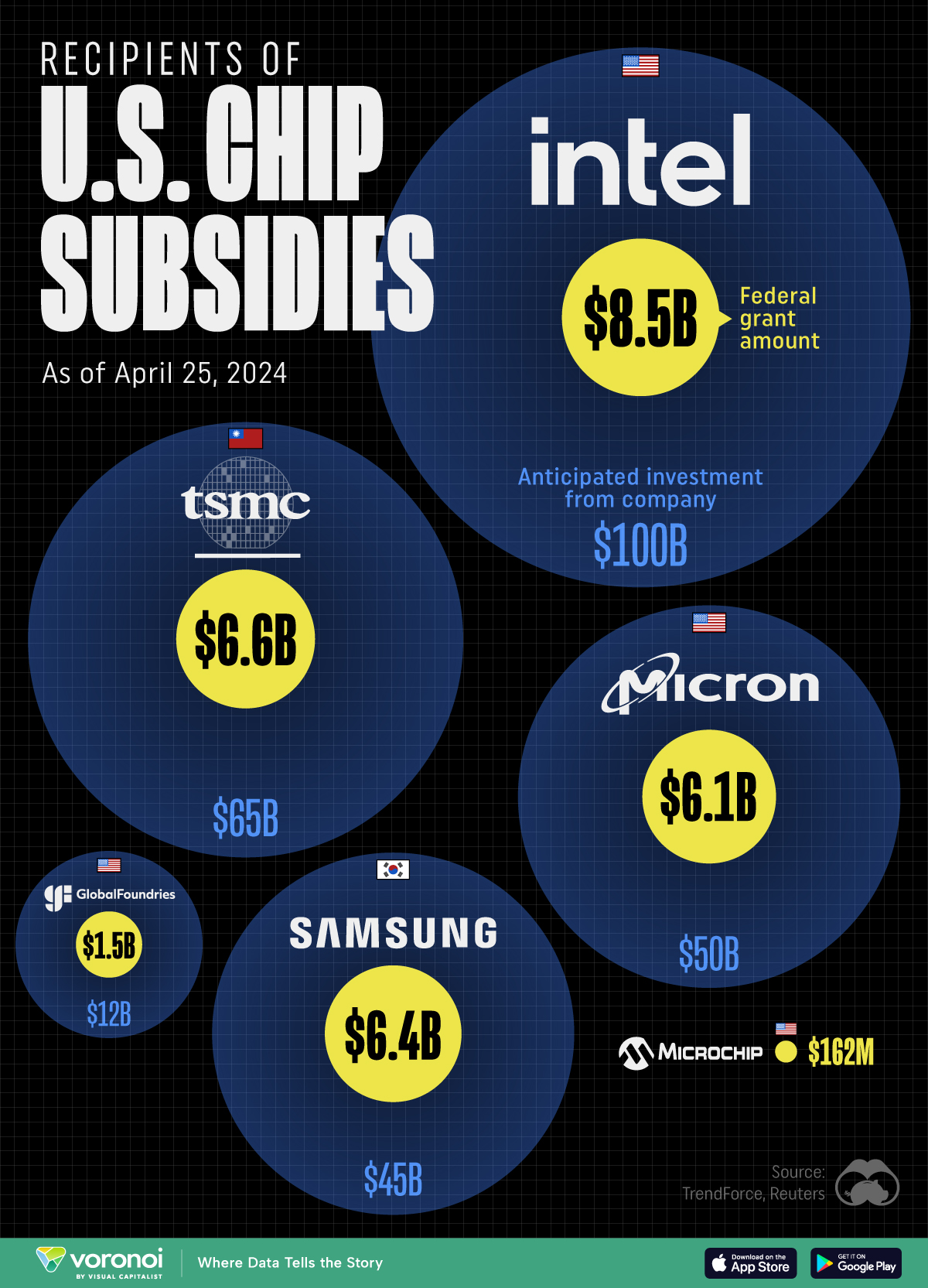

All of the Grants Given by the U.S. CHIPS Act

Intel, TSMC, and more have received billions in subsidies from the U.S. CHIPS Act in 2024.


This was originally posted on our Voronoi app.

This visualization shows which companies are receiving grants from the U.S. CHIPS Act, as of April 25, 2024. The CHIPS Act is a federal statute signed into law by President Joe Biden that authorizes $280 billion in new funding to boost domestic research and manufacturing of semiconductors.

The grant amounts visualized in this graphic are intended to accelerate the construction of semiconductor fabrication plants (fabs) across the United States.

Data and Company Highlights

The figures we used to create this graphic were collected from a variety of public news sources. The Semiconductor Industry Association (SIA) also maintains a tracker for CHIPS Act recipients, though at the time of writing it does not have the latest details for Micron.

| Company | Federal Grant Amount | Anticipated Investment From Company |
|---|---|---|
| 🇺🇸 Intel | $8,500,000,000 | $100,000,000,000 |
| 🇹🇼 TSMC | $6,600,000,000 | $65,000,000,000 |
| 🇰🇷 Samsung | $6,400,000,000 | $45,000,000,000 |
| 🇺🇸 Micron | $6,100,000,000 | $50,000,000,000 |
| 🇺🇸 GlobalFoundries | $1,500,000,000 | $12,000,000,000 |
| 🇺🇸 Microchip | $162,000,000 | N/A |
| 🇬🇧 BAE Systems | $35,000,000 | N/A |

Note: BAE Systems was not included in the graphic due to size limitations.
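For readers who want to work with these figures, the short Python sketch below totals the grants in the table and compares each grant with the company's anticipated investment. The values are copied from the table; the names `CHIPS_GRANTS`, `total_grants`, and `grant_per_investment_dollar` are illustrative only, and the "N/A" entries are represented as `None`.

```python
# Figures copied from the table above, in U.S. dollars:
# company -> (federal grant, anticipated company investment).
# None stands in for the "N/A" entries.
CHIPS_GRANTS = {
    "Intel": (8_500_000_000, 100_000_000_000),
    "TSMC": (6_600_000_000, 65_000_000_000),
    "Samsung": (6_400_000_000, 45_000_000_000),
    "Micron": (6_100_000_000, 50_000_000_000),
    "GlobalFoundries": (1_500_000_000, 12_000_000_000),
    "Microchip": (162_000_000, None),
    "BAE Systems": (35_000_000, None),
}


def total_grants(table):
    """Sum the federal grant amounts across all listed companies."""
    return sum(grant for grant, _ in table.values())


def grant_per_investment_dollar(table):
    """Return grant / anticipated investment for companies that list both."""
    return {
        name: grant / investment
        for name, (grant, investment) in table.items()
        if investment is not None
    }


if __name__ == "__main__":
    print(f"Total grants listed: ${total_grants(CHIPS_GRANTS) / 1e9:.1f} billion")
    for name, ratio in grant_per_investment_dollar(CHIPS_GRANTS).items():
        print(f"{name}: grant equals {ratio:.1%} of anticipated investment")
```

The ratio is only a rough indicator: the anticipated investments span different time horizons and projects, so the comparison across companies should be read loosely.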

Intel’s Massive Plans

Intel is receiving the largest share of the pie, with $8.5 billion in grants (plus an additional $11 billion in government loans). This grant accounts for 22% of the CHIPS Act’s total subsidies for chip production.
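The 22% figure can be checked with a quick calculation. The sketch below assumes that the CHIPS Act's subsidies for chip production total roughly $39 billion; that denominator is not stated in this article and is included here as an assumption, and the function name `share_percent` is illustrative.

```python
INTEL_GRANT_USD = 8_500_000_000  # grant amount from the article

# Assumption (not stated in the article): about $39 billion of CHIPS Act
# funding is set aside as subsidies for chip production.
PRODUCTION_SUBSIDIES_USD = 39_000_000_000


def share_percent(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


intel_share = share_percent(INTEL_GRANT_USD, PRODUCTION_SUBSIDIES_USD)
print(f"Intel's share of production subsidies: {intel_share:.0f}%")
```

Under that assumption, the result rounds to the 22% cited above.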

From Intel’s side, the company is expected to invest $100 billion to construct new fabs in Arizona and Ohio, while modernizing and/or expanding existing fabs in Oregon and New Mexico. Intel could also claim another $25 billion in credits through the U.S. Treasury Department’s Investment Tax Credit.

TSMC Expands its U.S. Presence

TSMC, the world’s largest semiconductor foundry company, is receiving a hefty $6.6 billion to construct a new chip plant with three fabs in Arizona. The Taiwanese chipmaker is expected to invest $65 billion into the project.

The plant’s first fab will be up and running in the first half of 2025, leveraging 4 nm (nanometer) technology. According to TrendForce, the other fabs will produce chips on more advanced 3 nm and 2 nm processes.

The Latest Grant Goes to Micron

Micron, the only U.S.-based manufacturer of memory chips, is set to receive $6.1 billion in grants to support its plans of investing $50 billion through 2030. This investment will be used to construct new fabs in Idaho and New York.

-

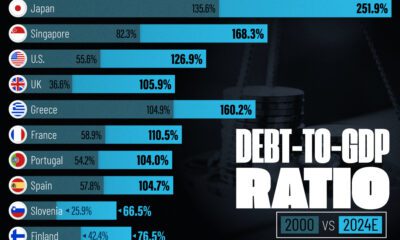

Debt1 week ago

Debt1 week agoHow Debt-to-GDP Ratios Have Changed Since 2000

-

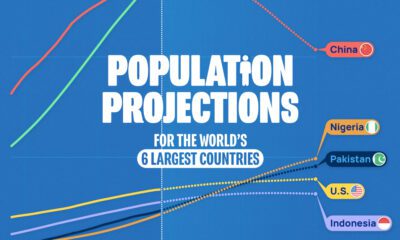

Countries2 weeks ago

Countries2 weeks agoPopulation Projections: The World’s 6 Largest Countries in 2075

-

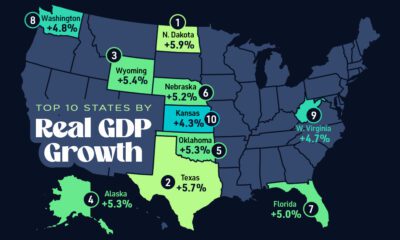

Markets2 weeks ago

Markets2 weeks agoThe Top 10 States by Real GDP Growth in 2023

-

Demographics2 weeks ago

Demographics2 weeks agoThe Smallest Gender Wage Gaps in OECD Countries

-

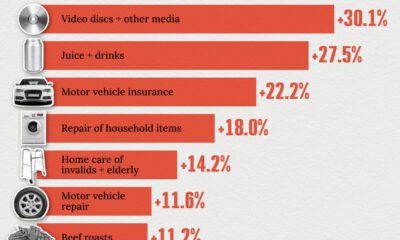

United States2 weeks ago

United States2 weeks agoWhere U.S. Inflation Hit the Hardest in March 2024

-

Green2 weeks ago

Green2 weeks agoTop Countries By Forest Growth Since 2001

-

United States2 weeks ago

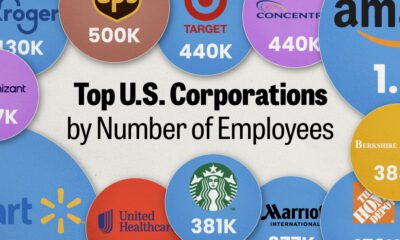

United States2 weeks agoRanked: The Largest U.S. Corporations by Number of Employees

-

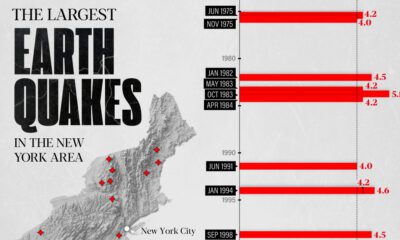

Maps2 weeks ago

Maps2 weeks agoThe Largest Earthquakes in the New York Area (1970-2024)