Technology

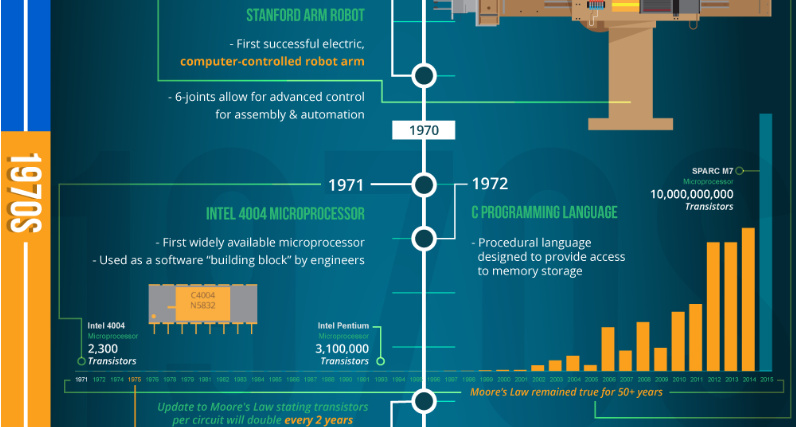

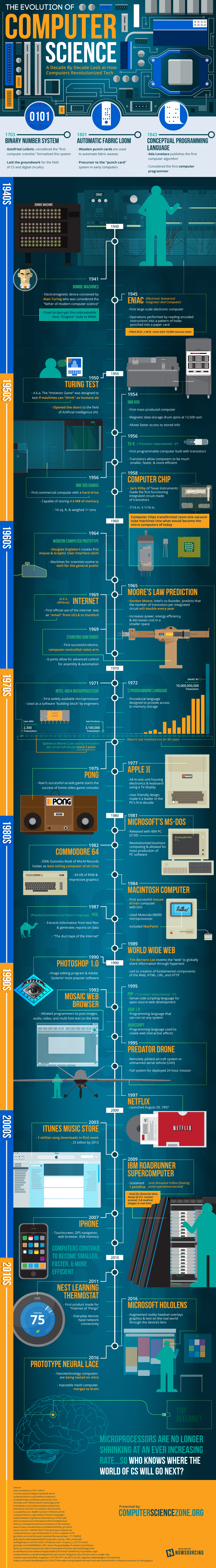

The Evolution of Computer Science in One Infographic

We take computing power for granted today.

That’s because computers are literally everywhere around us. And thanks to advances in technology and manufacturing, the cost of producing semiconductors is so low that we’ve even started turning things like toys and streetlights into computers.

But how and where did this familiar new era start?

The History of Computer Science

Today’s infographic comes to us from Computer Science Zone, and it describes the journey of how we got to today’s tech-oriented consumer society.

It may surprise you to learn that the humble and abstract groundwork of what we now call computer science goes all the way back to the beginning of the 18th century.

That groundwork traces back to the famous mathematician Gottfried Wilhelm Leibniz.

Leibniz, a polymath who lived in the Holy Roman Empire in what is now Germany, was quite the talent. He independently developed differential and integral calculus, designed his own mechanical calculators, and was a leading advocate of rationalism.

Arguably, however, the modern impact of his work stems mostly from his formalization of the binary number system in 1703. He even envisioned a future machine that could compute using such a system.
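To make the binary system concrete, here is a minimal Python sketch (our own illustration, not Leibniz’s notation) that writes an ordinary whole number in base 2 by repeatedly dividing by 2 and collecting the remainders. The function name `to_binary` is hypothetical.

```python
def to_binary(n: int) -> str:
    """Return the base-2 digits of a non-negative integer n (illustrative helper)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        # divmod gives the quotient and the remainder; the remainder is the next-lowest bit
        n, remainder = divmod(n, 2)
        digits.append(str(remainder))
    # Bits were collected lowest-first, so reverse them for normal reading order
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(to_binary(1703))  # prints 11010100111
```

Python’s built-in `bin()` does the same job; the loop above just spells out the repeated-division idea behind positional binary notation.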

From Vacuum Tubes to Moore’s Law

Early computers, such as the IBM 650, used vacuum tube circuit modules for their logic circuitry. Vacuum tube machines, in use until the early 1960s, consumed vast amounts of electricity, failed often, and needed constant inspection for defective tubes. They were also the size of entire rooms.

Luckily, the transistor was invented and later integrated into circuits, and in 1958 Jack Kilby of Texas Instruments produced the very first functioning integrated circuit. In 1965, Gordon Moore, then at Fairchild Semiconductor and later a co-founder of Intel, predicted that the number of transistors per integrated circuit would double every year, a prediction now known as “Moore’s Law”. In 1975, he revised the pace to a doubling roughly every two years.

Moore’s Law, which describes exponential growth, held for roughly 50 years before it began to bump up against its limits.

It can’t continue forever. The nature of exponentials is that you push them out and eventually disaster happens.

– Gordon Moore in 2005
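The arithmetic behind Moore’s Law is simple exponential doubling: a count N0 that doubles every T years grows to N0 × 2^(t/T) after t years. The minimal Python sketch below illustrates this; the starting count of 1,000 transistors is purely hypothetical, and `projected_transistors` is an illustrative name, not an industry forecasting model.

```python
def projected_transistors(initial_count: int, years: float, doubling_period: float = 1.0) -> float:
    """Return initial_count * 2 ** (years / doubling_period) (illustrative helper)."""
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    return initial_count * 2 ** (years / doubling_period)


if __name__ == "__main__":
    start = 1_000  # hypothetical starting transistor count
    for years in (1, 5, 10):
        # Doubling every year, as in Moore's original 1965 prediction
        yearly = projected_transistors(start, years, doubling_period=1.0)
        # Doubling every two years, as in Moore's 1975 revision
        biennial = projected_transistors(start, years, doubling_period=2.0)
        print(f"after {years:>2} years: {yearly:,.0f} (1-yr doubling), "
              f"{biennial:,.0f} (2-yr doubling)")
```

Under annual doubling, ten years multiplies the count by 2^10 = 1,024; doubling every two years yields only a 32-fold increase over the same decade, which shows how sensitive long-run projections are to the assumed doubling period.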

It’s now been argued by everyone from The Economist to the CEO of Nvidia that Moore’s Law is over for all practical purposes, but that doesn’t mean it’s the end of the road for computer science. In fact, it’s just the opposite.

The Next Computing Era

Computers no longer take up rooms – even very powerful ones now fit in the palm of your hand.

They are cheap enough to put in refrigerators, irrigation systems, thermostats, smoke detectors, cars, streetlights, and clothing. They can even be embedded in your skin.

The coming computing era will be dominated by artificial intelligence, the Internet of Things (IoT), robotics, and unprecedented connectivity. And even if progress continues at a sub-exponential rate, it will still be an incredible next step in the evolution of computer science.


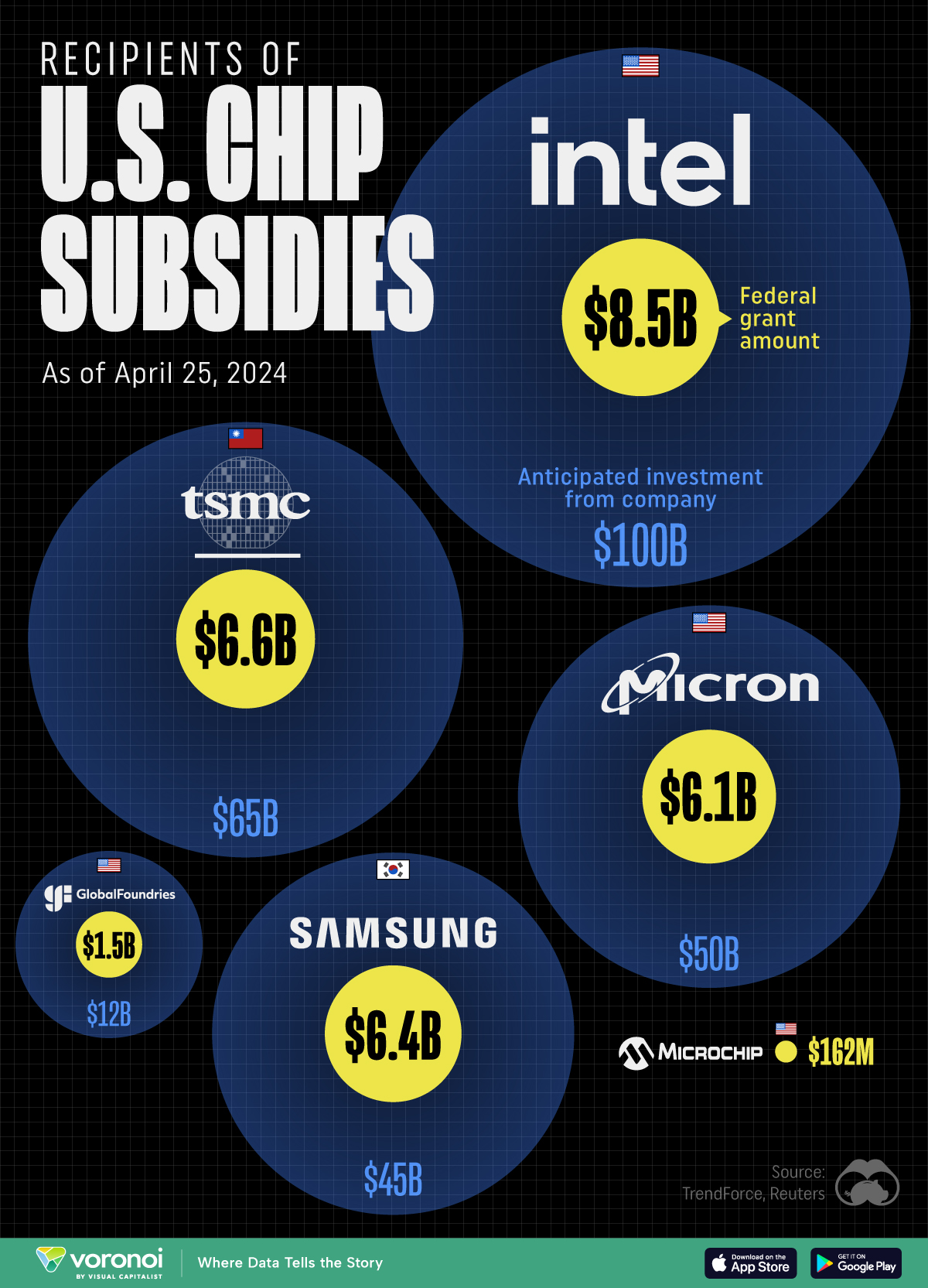

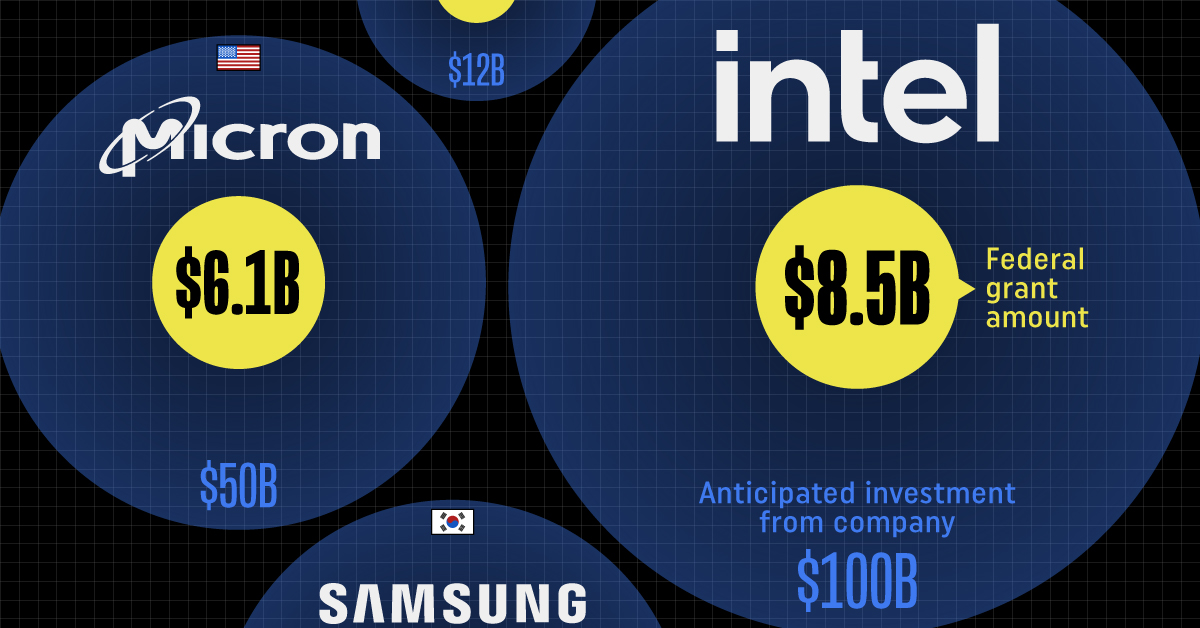

All of the Grants Given by the U.S. CHIPS Act

Intel, TSMC, and other chipmakers were awarded billions in subsidies from the U.S. CHIPS Act in 2024.


This was originally posted on our Voronoi app.

This visualization shows which companies are receiving grants from the U.S. CHIPS Act, as of April 25, 2024. The CHIPS and Science Act is a federal statute, signed into law by President Joe Biden in August 2022, that authorizes about $280 billion in new funding, including roughly $52.7 billion to boost domestic semiconductor research and manufacturing.

The grants visualized in this graphic are intended to accelerate the construction of semiconductor fabrication plants (fabs) across the United States.

Data and Company Highlights

The figures we used to create this graphic were collected from a variety of public news sources. The Semiconductor Industry Association (SIA) also maintains a tracker for CHIPS Act recipients, though at the time of writing it does not have the latest details for Micron.

| Company | Federal Grant Amount | Anticipated Investment From Company |
|---|---|---|
| 🇺🇸 Intel | $8,500,000,000 | $100,000,000,000 |
| 🇹🇼 TSMC | $6,600,000,000 | $65,000,000,000 |
| 🇰🇷 Samsung | $6,400,000,000 | $45,000,000,000 |
| 🇺🇸 Micron | $6,100,000,000 | $50,000,000,000 |
| 🇺🇸 GlobalFoundries | $1,500,000,000 | $12,000,000,000 |
| 🇺🇸 Microchip | $162,000,000 | N/A |
| 🇬🇧 BAE Systems | $35,000,000 | N/A |

BAE Systems was not included in the graphic due to size limitations.

Intel’s Massive Plans

Intel is receiving the largest share of the pie, with $8.5 billion in grants (plus an additional $11 billion in government loans). That grant alone accounts for about 22% of the roughly $39 billion the CHIPS Act sets aside for chip manufacturing subsidies.
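As a quick check on that percentage, the following Python sketch divides each grant in the table by the size of the CHIPS Act’s manufacturing-incentive pool. The pool size of about $39 billion is our assumption based on public reporting of the law, not a figure taken from this article’s data, and the names `grants_usd` and `share_of_pool` are illustrative.

```python
# Assumed size of the CHIPS Act manufacturing-incentive pool (not from the article's data)
MANUFACTURING_POOL_USD = 39_000_000_000

# Grant amounts copied from the table in this article
grants_usd = {
    "Intel": 8_500_000_000,
    "TSMC": 6_600_000_000,
    "Samsung": 6_400_000_000,
    "Micron": 6_100_000_000,
    "GlobalFoundries": 1_500_000_000,
    "Microchip": 162_000_000,
    "BAE Systems": 35_000_000,
}


def share_of_pool(amount_usd: int, pool_usd: int = MANUFACTURING_POOL_USD) -> float:
    """Return amount_usd as a percentage of pool_usd."""
    return 100 * amount_usd / pool_usd


if __name__ == "__main__":
    for company, amount in grants_usd.items():
        print(f"{company:<16} {share_of_pool(amount):5.1f}%")
    total = sum(grants_usd.values())
    print(f"{'Total':<16} {share_of_pool(total):5.1f}%")
```

With a $39 billion pool, Intel’s $8.5 billion works out to about 21.8%, consistent with the 22% cited above, and the seven grants in the table total $29.297 billion, or roughly 75% of the pool.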

For its part, Intel is expected to invest $100 billion to build new fabs in Arizona and Ohio and to modernize or expand existing fabs in Oregon and New Mexico. Intel could also claim another $25 billion in credits through the U.S. Treasury Department’s Investment Tax Credit.

TSMC Expands its U.S. Presence

TSMC, the world’s largest semiconductor foundry, is receiving a hefty $6.6 billion to build a new chip plant with three fabs in Arizona. The Taiwanese chipmaker is expected to invest $65 billion in the project.

The plant’s first fab is expected to be up and running in the first half of 2025, using 4 nm (nanometer) process technology. According to TrendForce, the other fabs will produce chips on more advanced 3 nm and 2 nm processes.

The Latest Grant Goes to Micron

Micron, the only U.S.-based manufacturer of memory chips, is set to receive $6.1 billion in grants to support its plans to invest $50 billion through 2030. The investment will fund the construction of new fabs in Idaho and New York.

-

Debt1 week ago

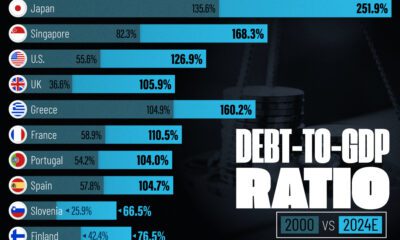

Debt1 week agoHow Debt-to-GDP Ratios Have Changed Since 2000

-

Markets2 weeks ago

Markets2 weeks agoRanked: The World’s Top Flight Routes, by Revenue

-

Countries2 weeks ago

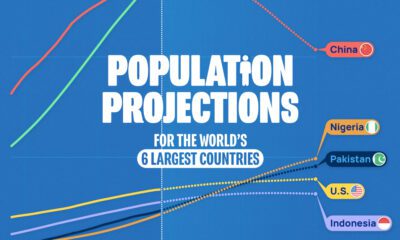

Countries2 weeks agoPopulation Projections: The World’s 6 Largest Countries in 2075

-

Markets2 weeks ago

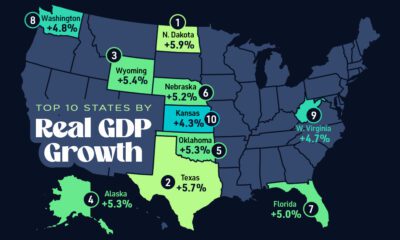

Markets2 weeks agoThe Top 10 States by Real GDP Growth in 2023

-

Demographics2 weeks ago

Demographics2 weeks agoThe Smallest Gender Wage Gaps in OECD Countries

-

United States2 weeks ago

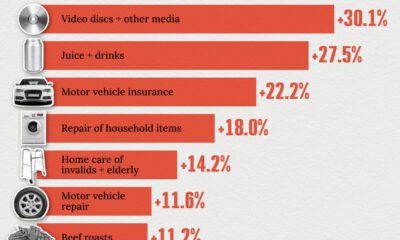

United States2 weeks agoWhere U.S. Inflation Hit the Hardest in March 2024

-

Green2 weeks ago

Green2 weeks agoTop Countries By Forest Growth Since 2001

-

United States2 weeks ago

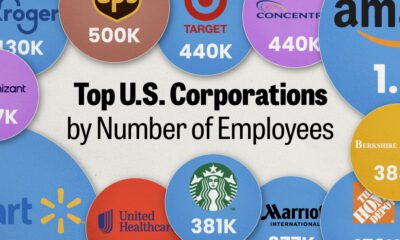

United States2 weeks agoRanked: The Largest U.S. Corporations by Number of Employees