Technology

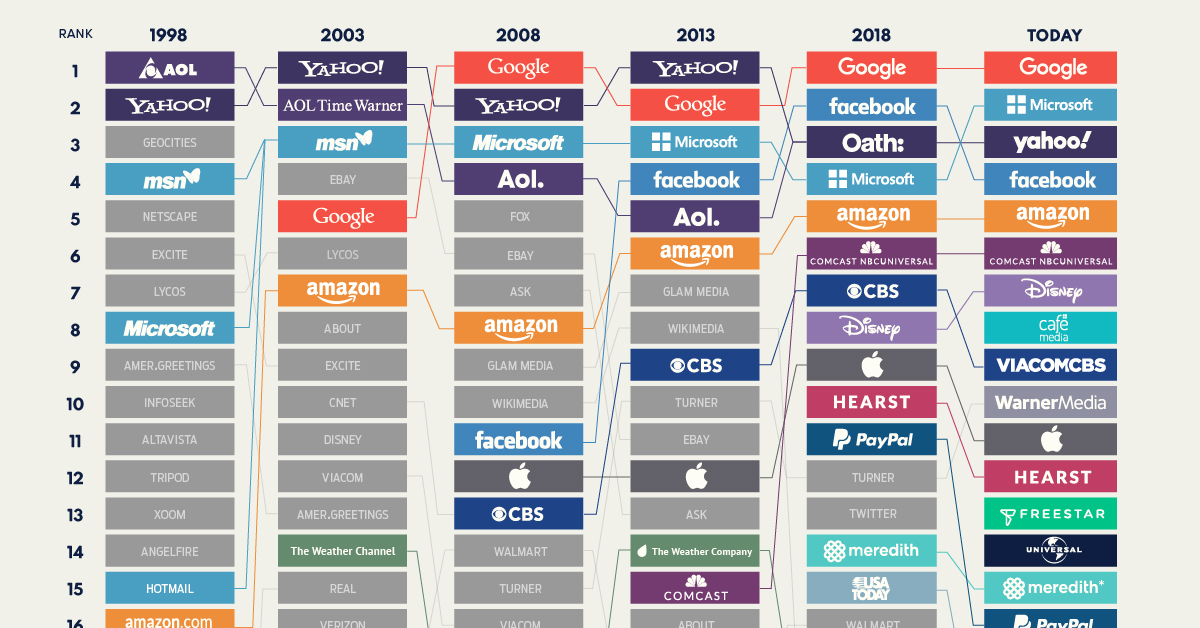

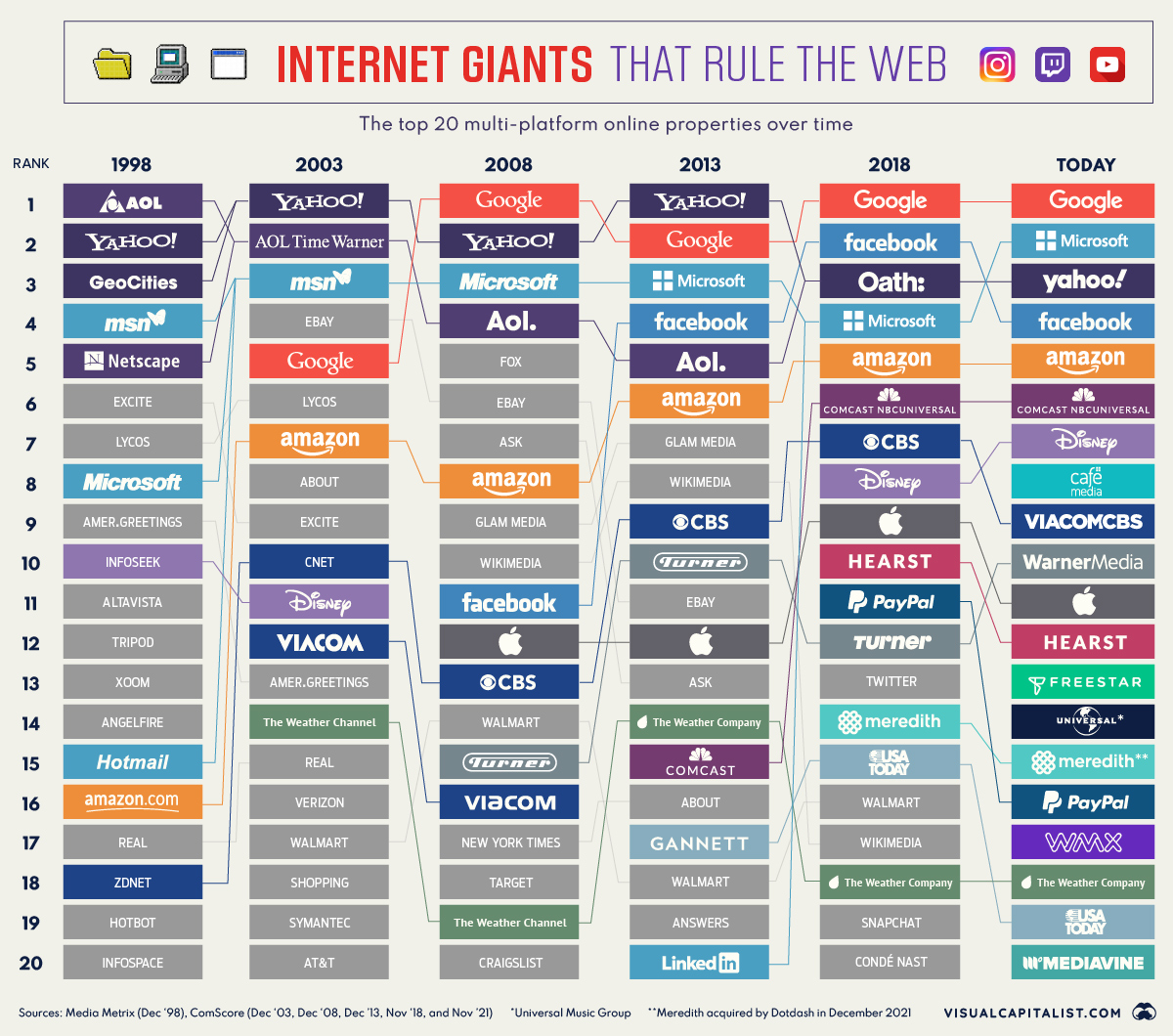

The 20 Internet Giants That Rule the Web (1998-Today)

With each passing year, an increasingly large segment of the population no longer remembers images loading a single pixel row at a time, the earsplitting sound of a 56k modem, or the early domination of web portals.

Many of the top websites in 1998 were news aggregators or search portals, which are easy concepts to understand. Today, brand touch-points are often spread out between devices (e.g. mobile apps vs. desktop) and a myriad of services and sub-brands (e.g. Facebook’s constellation of apps). As a result, the world’s biggest websites are complex, interconnected web properties.

The visualization above, which primarily uses data from ComScore’s U.S. Multi-Platform Properties ranking, looks at which of the internet giants have evolved to stay on top, and which have faded into internet lore.

America Moves Online

For millions of curious people in the late ’90s, the iconic AOL compact disc was the key that opened the door to the World Wide Web. At its peak, an estimated 35 million people accessed the internet using AOL, and the company rode the dot-com bubble to dizzying heights, reaching a valuation of $222 billion in 1999.

AOL’s brand may not carry the cachet it once did, but it never completely faded into obscurity. The company continually evolved, finally merging with Yahoo after Verizon acquired both of the legendary online brands. Verizon had high hopes for the combined company, called Oath, to evolve into a “third option” for advertisers and users who were fed up with Google and Facebook.

Sadly, those ambitions did not materialize as planned. In 2019, Oath was renamed Verizon Media, and was eventually sold once again in 2021.

A City of Gifs and Web Logs

As internet usage began to reach critical mass, web hosts such as AngelFire and GeoCities made it easy for people to create a new home on the Web.

GeoCities, in particular, made a huge impact on the early internet, hosting millions of websites and giving people a way to actually participate in creating online content. If it were a physical community of “home” pages, it would’ve been the third-largest city in America, after Los Angeles.

This early online community was at risk of being erased permanently when GeoCities was finally shuttered by Yahoo in 2009, but luckily, the nonprofit Internet Archive took special efforts to create a thorough record of GeoCities-hosted pages.

From A to Z

In December of 1998, long before Amazon became the well-oiled retail machine we know today, the company was in the midst of a massive holiday season crunch.

In the real world, employees were pulling long hours and even sleeping in cars to keep the goods flowing. Online, Amazon.com had become one of the biggest sites on the internet as people grew comfortable with the idea of purchasing goods online. Demand surged as the company began to expand its offering beyond books.

Amazon.com has grown to be the most successful merchant on the Internet.

– New York Times (1998)

Digital Magazine Rack

Meredith will be an unfamiliar brand to many people looking at today’s top 20 list. While Meredith may not be a household name, the company controlled many of the country’s most popular magazine brands (People, AllRecipes, Martha Stewart, Health, etc.) including their sizable digital footprints. The company also owned a slew of local television networks around the United States.

After its acquisition of Time Inc. in 2017, Meredith became the largest magazine publisher in the world. Since then, however, Meredith has divested many of its most valuable assets (Time, Sports Illustrated, Fortune). In December 2021, Meredith merged with IAC’s Dotdash.

“Hey, Google”

When people have burning questions, they increasingly turn to the internet for answers, but the diversity of sources for those answers is shrinking.

Even as recently as 2013, About.com, Ask.com, and Answers.com were still among the biggest websites in America. Today, though, Google appears to have cemented its status as a universal wellspring of answers.

As smart speakers and voice assistants continue to penetrate the market and influence search behavior, Google is unlikely to face any near-term competition from any company not already in the top 20 list.

New Kids on the Block

Social media has long since outgrown its fad stage and is now a common digital thread connecting people across the world. While Facebook rapidly jumped into the top 20 by 2007, other social media-infused brands took longer to grow into internet giants.

By 2018, Twitter, Snapchat, and Facebook’s umbrella of platforms were all in the top 20.

A Tangled Web

Today’s internet giants have evolved far beyond their ancestors from two decades ago. Many of the companies in the top 20 run numerous platforms and content streams, and more often than not, they are not household names.

A few, such as Mediavine and CafeMedia, are services that manage ads. Others manage content distribution, such as music, or manage a constellation of smaller media properties, as is the case with Hearst.

Lastly, there are still the tech giants. Three of the top five web properties were already in the top 20 list in 1998. In the fast-paced digital ecosystem, that’s remarkable staying power.

This article was inspired by an earlier work by Philip Bump, published in the Washington Post.

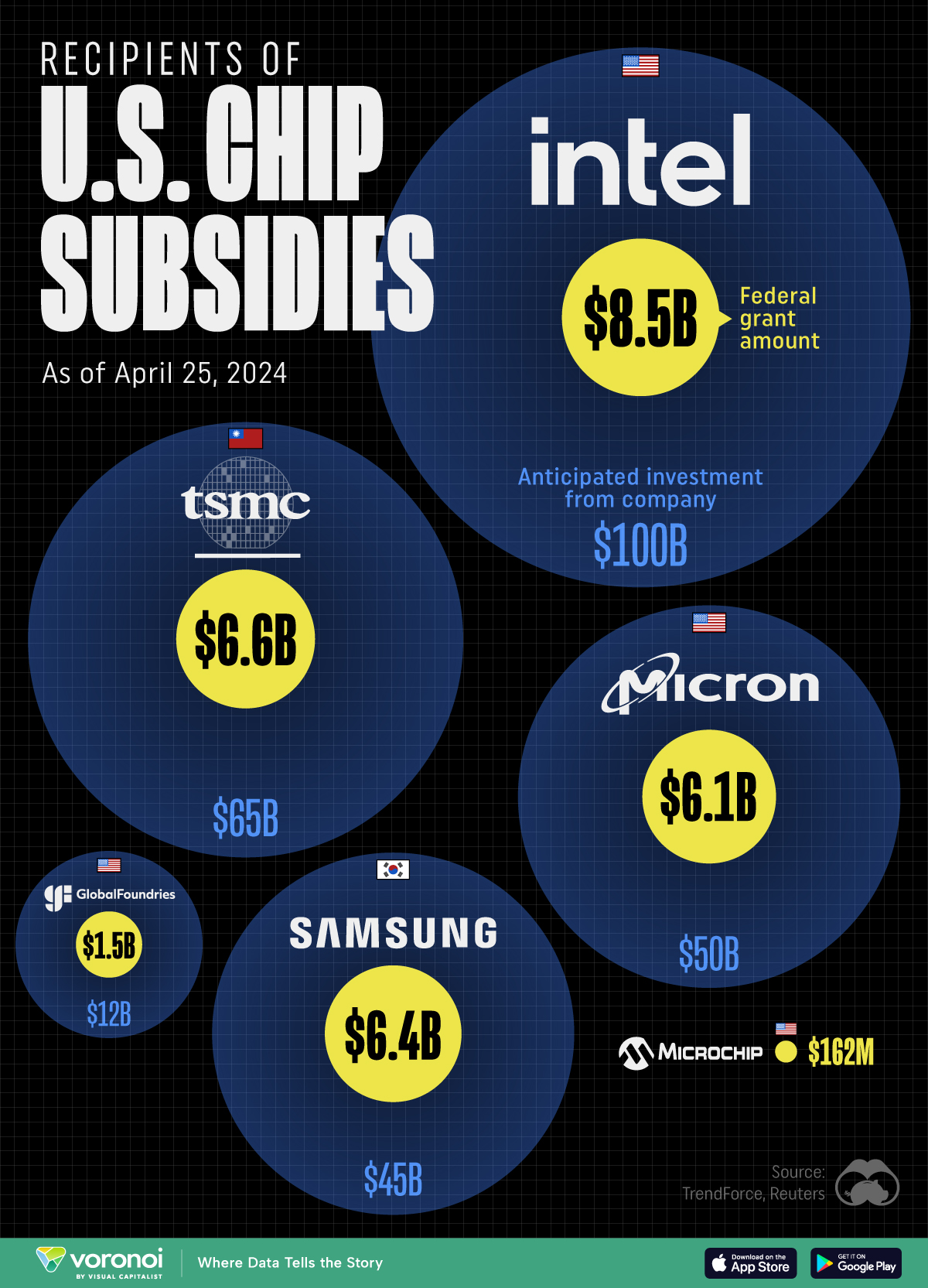

All of the Grants Given by the U.S. CHIPS Act

Intel, TSMC, and more have received billions in subsidies from the U.S. CHIPS Act in 2024.

This was originally posted on our Voronoi app.

This visualization shows which companies are receiving grants from the U.S. CHIPS Act, as of April 25, 2024. The CHIPS Act is a federal statute signed into law by President Joe Biden that authorizes $280 billion in new funding to boost domestic research and manufacturing of semiconductors.

The grant amounts visualized in this graphic are intended to accelerate the construction of semiconductor fabrication plants (fabs) across the United States.

Data and Company Highlights

The figures we used to create this graphic were collected from a variety of public news sources. The Semiconductor Industry Association (SIA) also maintains a tracker for CHIPS Act recipients, though at the time of writing it does not have the latest details for Micron.

| Company | Federal Grant Amount | Anticipated Investment From Company |
|---|---|---|
| 🇺🇸 Intel | $8,500,000,000 | $100,000,000,000 |
| 🇹🇼 TSMC | $6,600,000,000 | $65,000,000,000 |
| 🇰🇷 Samsung | $6,400,000,000 | $45,000,000,000 |
| 🇺🇸 Micron | $6,100,000,000 | $50,000,000,000 |
| 🇺🇸 GlobalFoundries | $1,500,000,000 | $12,000,000,000 |
| 🇺🇸 Microchip | $162,000,000 | N/A |
| 🇬🇧 BAE Systems | $35,000,000 | N/A |

BAE Systems was not included in the graphic due to size limitations.
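For readers who want to work with these figures, the rows can be tallied in a few lines of Python. This is only a rough sketch: the numbers are copied from the table, while the names `GRANTS`, `total_grants`, and `investment_per_grant_dollar` are made up for illustration, and companies listed as "N/A" are simply left out of the ratio.

```python
# Figures copied from the table (US dollars): (federal grant, anticipated investment).
# None stands in for "N/A". All names here are illustrative, not from the source.
GRANTS = {
    "Intel": (8_500_000_000, 100_000_000_000),
    "TSMC": (6_600_000_000, 65_000_000_000),
    "Samsung": (6_400_000_000, 45_000_000_000),
    "Micron": (6_100_000_000, 50_000_000_000),
    "GlobalFoundries": (1_500_000_000, 12_000_000_000),
    "Microchip": (162_000_000, None),
    "BAE Systems": (35_000_000, None),
}


def total_grants(grants):
    """Sum the federal grant amounts across all listed companies."""
    return sum(grant for grant, _ in grants.values())


def investment_per_grant_dollar(grants):
    """Company investment per federal grant dollar, skipping N/A rows."""
    return {
        name: investment / grant
        for name, (grant, investment) in grants.items()
        if investment is not None
    }


if __name__ == "__main__":
    print(f"Total grants listed: ${total_grants(GRANTS) / 1e9:.1f}B")
    for name, ratio in investment_per_grant_dollar(GRANTS).items():
        print(f"{name}: ${ratio:.2f} invested per grant dollar")
```

On these numbers, the seven grants add up to roughly $29.3 billion, and each federal dollar is paired with between about $7 and $12 of anticipated company investment.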

Intel’s Massive Plans

Intel is receiving the largest share of the pie, with $8.5 billion in grants (plus an additional $11 billion in government loans). This grant accounts for 22% of the CHIPS Act’s total subsidies for chip production.
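The 22% figure can be sanity-checked with a short calculation. The sketch below assumes the denominator is the roughly $39 billion that the CHIPS Act sets aside for manufacturing incentives; that pool size is not stated in this article, so treat it as an outside assumption. The constant and function names are illustrative only.

```python
# Intel's grant as stated in the article (US dollars).
INTEL_GRANT = 8_500_000_000

# ASSUMPTION (not from the article): the CHIPS Act's manufacturing
# incentives pool is taken to be about $39 billion.
MANUFACTURING_INCENTIVES_POOL = 39_000_000_000


def share_percent(part, whole):
    """Return `part` as a percentage of `whole`."""
    return 100 * part / whole


if __name__ == "__main__":
    share = share_percent(INTEL_GRANT, MANUFACTURING_INCENTIVES_POOL)
    print(f"Intel's share of the assumed pool: {share:.1f}%")
```

Under that assumption the share comes to a little under 22%, which is consistent with the rounded figure quoted above.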

From Intel’s side, the company is expected to invest $100 billion to construct new fabs in Arizona and Ohio, while modernizing and/or expanding existing fabs in Oregon and New Mexico. Intel could also claim another $25 billion in credits through the U.S. Treasury Department’s Investment Tax Credit.

TSMC Expands its U.S. Presence

TSMC, the world’s largest semiconductor foundry company, is receiving a hefty $6.6 billion to construct a new chip plant with three fabs in Arizona. The Taiwanese chipmaker is expected to invest $65 billion into the project.

The plant’s first fab will be up and running in the first half of 2025, leveraging 4 nm (nanometer) technology. According to TrendForce, the other fabs will produce chips on more advanced 3 nm and 2 nm processes.

The Latest Grant Goes to Micron

Micron, the only U.S.-based manufacturer of memory chips, is set to receive $6.1 billion in grants to support its plans of investing $50 billion through 2030. This investment will be used to construct new fabs in Idaho and New York.

-

Energy1 week ago

Energy1 week agoThe World’s Biggest Nuclear Energy Producers

-

Money2 weeks ago

Money2 weeks agoWhich States Have the Highest Minimum Wage in America?

-

Technology2 weeks ago

Technology2 weeks agoRanked: Semiconductor Companies by Industry Revenue Share

-

Markets2 weeks ago

Markets2 weeks agoRanked: The World’s Top Flight Routes, by Revenue

-

Countries2 weeks ago

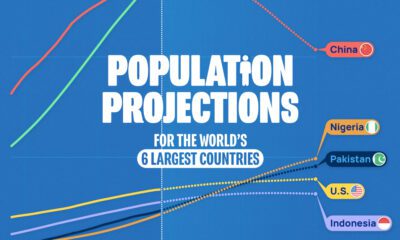

Countries2 weeks agoPopulation Projections: The World’s 6 Largest Countries in 2075

-

Markets2 weeks ago

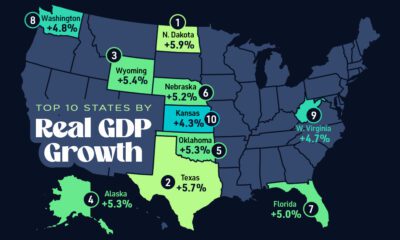

Markets2 weeks agoThe Top 10 States by Real GDP Growth in 2023

-

Demographics2 weeks ago

Demographics2 weeks agoThe Smallest Gender Wage Gaps in OECD Countries

-

United States2 weeks ago

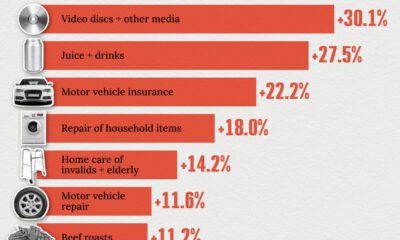

United States2 weeks agoWhere U.S. Inflation Hit the Hardest in March 2024