Technology

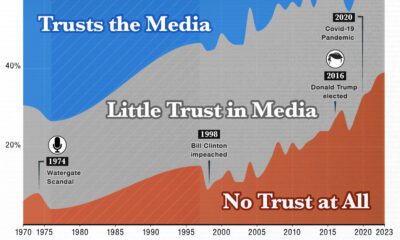

Here Are 13 Ideas to Fight Fake News – and a Big Problem With All of Them

Will humans or computer algorithms be the future arbiters of “truth”?

Today’s infographic from Futurism sums up the ideas that academics, technologists, and other experts are proposing to stop the spread of fake news.

Below the infographic, we raise our concerns about these methods.

While fake news is certainly problematic, the solutions proposed to penalize articles deemed to be “untrue” are just as scary.

By centralizing fact-checking, these proposals create a system that is inherently fragile, biased, and prone to abuse. Furthermore, removing websites deemed to be “untrue” limits independent thought and discourse while allowing legacy media to remain entrenched.

Centralizing “Truth”

It could be argued that the best thing about the internet is that it decentralizes content, allowing any individual, blog, or independent media company to stimulate discussion and new ideas with low barriers to entry. Millions of new entrants have changed the media landscape, leaving traditional media flailing to adjust their revenue models while keeping their influence intact.

If we say that “truth” can only be verified by a centralized mechanism – a group of people, or an algorithm written by a group of people – we are accepting that some sources will be arbitrarily preferred over others, unless those others conform to certain standards.

Based on this mechanism, it is almost certain that well-established journalistic sources like The New York Times or The Washington Post will be the most trusted. By the same token, newer sources (like independent media, or blogs written by emerging thought leaders) will not be able to gain traction unless they reference or receive backing from these “trusted” gatekeepers.

The Impact?

This centralization is problematic – here is a step-by-step explanation of why:

First, either method (human or computer) must rely on preconceived notions of what is “authoritative” and “true” to make decisions, so both will be biased in some way. Humans will lean towards a particular consensus or viewpoint, while computers must rank authority based on proxy signals (PageRank, backlinks, source recognition, or headline/content analysis).
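PageRank is one of the signals listed above. As a rough illustration of how link-based ranking rewards sources that are already widely cited, here is a minimal power-iteration sketch in Python. The link graph and site names are hypothetical, and real platforms combine many more signals than this.

```python
# Minimal PageRank sketch (power iteration) on a small, hypothetical link graph.
# Site names are invented for illustration; real ranking systems use many more signals.


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Return a PageRank score for every page in `links` (page -> outgoing links)."""
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its current score evenly among the pages it links to.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # Dangling page (no outgoing links): spread its score over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical graph: most sites link to one established outlet.
links = {
    "established_outlet": ["wire_service"],
    "wire_service": ["established_outlet"],
    "blog_a": ["established_outlet"],
    "blog_b": ["established_outlet", "blog_a"],
    "new_blog": [],  # nobody links to it, and it links to nobody
}

scores = pagerank(links)
for site, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{site:20s} {score:.3f}")
```

With this graph, `established_outlet` ends up with the highest score simply because most other sites link to it, while `new_blog`, which nobody links to, receives only the baseline share.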

Next, there is a snowball effect involved: if only posts referencing these authoritative sources of “truth” can get traction on social media, then these sources become even more authoritative over time. This creates entrenchment that will be difficult to overcome, and new bloggers or media outlets will only be able to move up the ladder by associating their posts with an existing consensus. Grassroots movements and new ideas will suffer – especially those that conflict with mainstream beliefs, government, or corporate power.
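The snowball effect can be illustrated with a deliberately simplified toy model. The sketch below assumes, hypothetically, that in each round new posts only gain traction if they cite one of the current top-ranked sources, so all new citations flow to those leaders. Source names, starting counts, and parameters are invented for illustration.

```python
# Toy "snowball effect" model: new citations only go to the current top-k sources.
# All source names, counts, and parameters are hypothetical.


def simulate_entrenchment(citations: dict[str, int], rounds: int,
                          new_posts_per_round: int, top_k: int = 2) -> dict[str, int]:
    """Return citation counts after `rounds` rounds of leader-only citing."""
    counts = dict(citations)  # copy so the caller's dict is not mutated
    for _ in range(rounds):
        # Rank by current citation count; ties are broken alphabetically.
        leaders = sorted(counts, key=lambda s: (-counts[s], s))[:top_k]
        # New posts split their citations as evenly as possible among the leaders.
        per_leader, remainder = divmod(new_posts_per_round, len(leaders))
        for i, source in enumerate(leaders):
            counts[source] += per_leader + (1 if i < remainder else 0)
    return counts


start = {"legacy_paper": 100, "legacy_tv": 90, "indie_blog": 80, "new_outlet": 10}
end = simulate_entrenchment(start, rounds=10, new_posts_per_round=20)
print(end)
# {'legacy_paper': 200, 'legacy_tv': 190, 'indie_blog': 80, 'new_outlet': 10}
```

Starting from 100, 90, 80, and 10 citations, the two leaders absorb all 200 new citations over ten rounds, and the gap between `indie_blog` and the second-place source widens from 10 to 110. The model ignores content quality entirely: ranking on prior authority alone is enough to produce entrenchment.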

Finally, this raises the question of who fact-checks the fact-checkers. Forbes has a great post on this, showing that Snopes.com (a fact-checker) could not even verify basic truths about its own operations.

Removing articles deemed to be “untrue” is a form of censorship. While it may help clear many ridiculous articles from people’s social feeds, it will also erode the qualities that make the internet so great in the first place: its decentralized nature, and the ability of any one person to make a profound impact on the world.

Technology

Visualizing AI Patents by Country

See which countries have been granted the most AI patents each year, from 2010 to 2022.


This was originally posted on our Voronoi app.

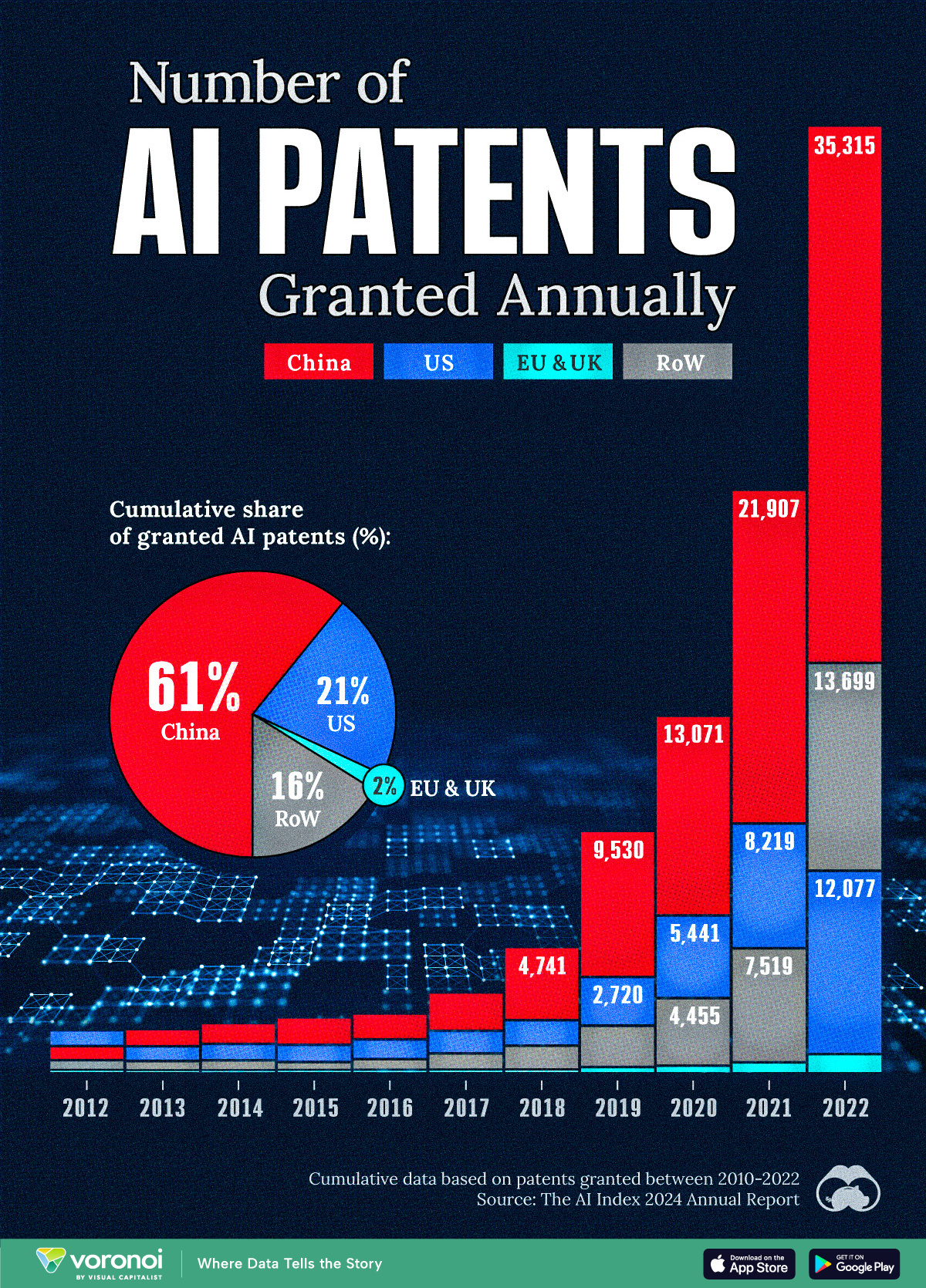

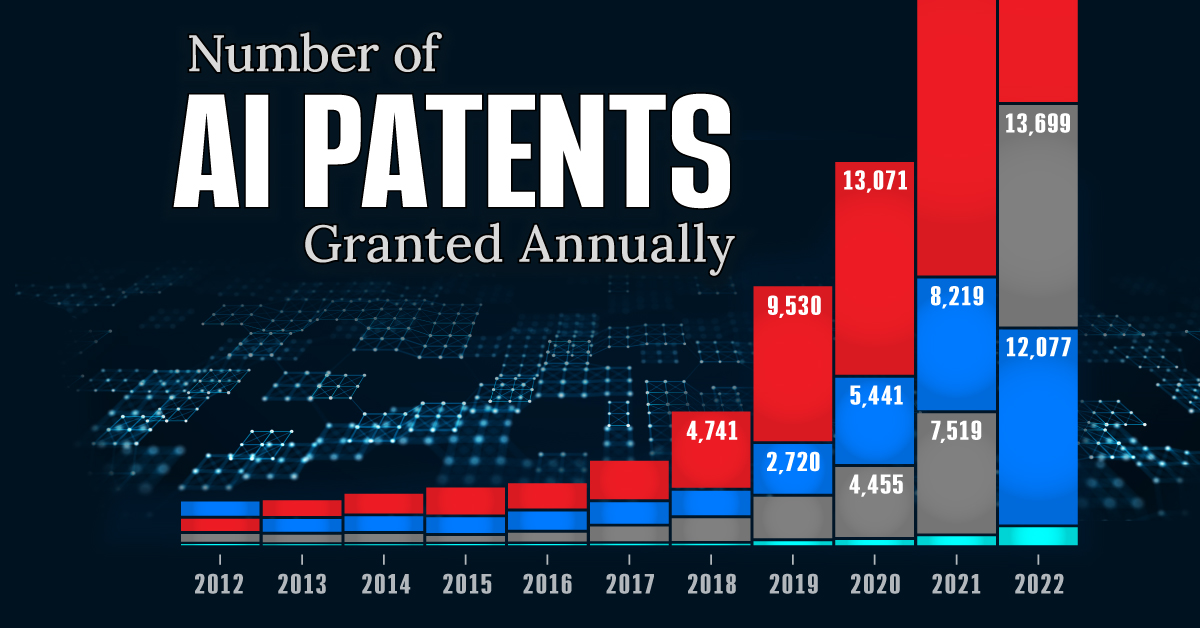

This infographic shows the number of AI-related patents granted each year from 2010 to 2022 (latest data available). These figures come from the Center for Security and Emerging Technology (CSET), accessed via Stanford University’s 2024 AI Index Report.

From this data, we can see that China first overtook the U.S. in 2013. Since then, the number of AI patents granted to China each year has climbed steeply, from 1,035 in 2013 to 35,315 in 2022.

| Year | China | EU and UK | U.S. | Rest of World | Global Total |
|---|---|---|---|---|---|
| 2010 | 307 | 137 | 984 | 571 | 1,999 |
| 2011 | 516 | 129 | 980 | 581 | 2,206 |
| 2012 | 926 | 112 | 950 | 660 | 2,648 |
| 2013 | 1,035 | 91 | 970 | 627 | 2,723 |
| 2014 | 1,278 | 97 | 1,078 | 667 | 3,120 |
| 2015 | 1,721 | 110 | 1,135 | 539 | 3,505 |
| 2016 | 1,621 | 128 | 1,298 | 714 | 3,761 |
| 2017 | 2,428 | 144 | 1,489 | 1,075 | 5,136 |
| 2018 | 4,741 | 155 | 1,674 | 1,574 | 8,144 |
| 2019 | 9,530 | 322 | 3,211 | 2,720 | 15,783 |
| 2020 | 13,071 | 406 | 5,441 | 4,455 | 23,373 |
| 2021 | 21,907 | 623 | 8,219 | 7,519 | 38,268 |
| 2022 | 35,315 | 1,173 | 12,077 | 13,699 | 62,264 |
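Because the table lists both regional counts and a Global Total, its arithmetic and the 2013 crossover claim can be checked directly. The short Python sketch below transcribes the table into a dictionary (the structure and function names are our own, for illustration only), confirms that each row's regional columns sum to its Global Total, and finds the first year in which China's count exceeds the U.S. count.

```python
from typing import Optional

# AI patents granted per year, transcribed from the table above.
# Tuple order: (China, EU and UK, U.S., Rest of World, Global Total).
PATENTS = {
    2010: (307, 137, 984, 571, 1_999),
    2011: (516, 129, 980, 581, 2_206),
    2012: (926, 112, 950, 660, 2_648),
    2013: (1_035, 91, 970, 627, 2_723),
    2014: (1_278, 97, 1_078, 667, 3_120),
    2015: (1_721, 110, 1_135, 539, 3_505),
    2016: (1_621, 128, 1_298, 714, 3_761),
    2017: (2_428, 144, 1_489, 1_075, 5_136),
    2018: (4_741, 155, 1_674, 1_574, 8_144),
    2019: (9_530, 322, 3_211, 2_720, 15_783),
    2020: (13_071, 406, 5_441, 4_455, 23_373),
    2021: (21_907, 623, 8_219, 7_519, 38_268),
    2022: (35_315, 1_173, 12_077, 13_699, 62_264),
}


def mismatched_totals(table: dict[int, tuple[int, ...]]) -> list[int]:
    """Return the years whose Global Total differs from the sum of the regional columns."""
    bad = []
    for year, row in table.items():
        *regions, total = row
        if sum(regions) != total:
            bad.append(year)
    return bad


def first_year_china_ahead_of_us(table: dict[int, tuple[int, ...]]) -> Optional[int]:
    """Return the earliest year in which China's count exceeds the U.S. count."""
    for year in sorted(table):
        china, _eu_uk, us, _rest, _total = table[year]
        if china > us:
            return year
    return None


print(mismatched_totals(PATENTS))             # []
print(first_year_china_ahead_of_us(PATENTS))  # 2013
print(f"{PATENTS[2022][0] / PATENTS[2013][0]:.1f}x")  # 34.1x
```

Every row adds up, the first year with China ahead is 2013, and China's annual count grew roughly 34-fold between 2013 and 2022.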

In 2022, China was granted more AI patents than all other countries combined.
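The table groups every non-Chinese patent into three columns (EU and UK, U.S., Rest of World), so this claim reduces to comparing China's 2022 count with the sum of the other three. A quick check in Python, using only the 2022 figures from the table:

```python
# 2022 row of the AI patent table: China vs. every other column combined.
china, eu_uk, us, rest_of_world = 35_315, 1_173, 12_077, 13_699

others = eu_uk + us + rest_of_world   # all non-China grants in 2022
china_share = china / (china + others)

print(others)                # 26949
print(china > others)        # True
print(f"{china_share:.1%}")  # 56.7%
```

China's 35,315 grants exceed the combined 26,949 for everyone else, which works out to roughly 57% of the 2022 global total.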

While this suggests that the country is very active in AI research, it doesn’t necessarily mean that China is the most advanced in terms of capability.

Key Facts About AI Patents

According to CSET, AI patents relate to mathematical relationships and algorithms, which are considered abstract ideas under patent law. Their meaning can also differ depending on where they are filed.

In the U.S., AI patenting is concentrated amongst large companies including IBM, Microsoft, and Google. On the other hand, AI patenting in China is more distributed across government organizations, universities, and tech firms (e.g. Tencent).

In terms of focus area, China’s AI patents are typically related to computer vision, a field of AI that enables computers and systems to interpret visual data and inputs. Meanwhile, America’s efforts are more evenly distributed across research fields.

Learn More About AI From Visual Capitalist

If you want to see more data visualizations on artificial intelligence, check out this graphic that shows which job departments will be impacted by AI the most.

-

Mining1 week ago

Mining1 week agoGold vs. S&P 500: Which Has Grown More Over Five Years?

-

Markets2 weeks ago

Markets2 weeks agoRanked: The Most Valuable Housing Markets in America

-

Money2 weeks ago

Money2 weeks agoWhich States Have the Highest Minimum Wage in America?

-

AI2 weeks ago

AI2 weeks agoRanked: Semiconductor Companies by Industry Revenue Share

-

Markets2 weeks ago

Markets2 weeks agoRanked: The World’s Top Flight Routes, by Revenue

-

Countries2 weeks ago

Countries2 weeks agoPopulation Projections: The World’s 6 Largest Countries in 2075

-

Markets2 weeks ago

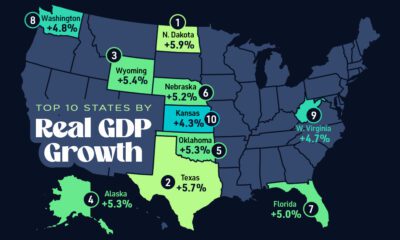

Markets2 weeks agoThe Top 10 States by Real GDP Growth in 2023

-

Demographics2 weeks ago

Demographics2 weeks agoThe Smallest Gender Wage Gaps in OECD Countries