
Charted: The Exponential Growth in AI Computation

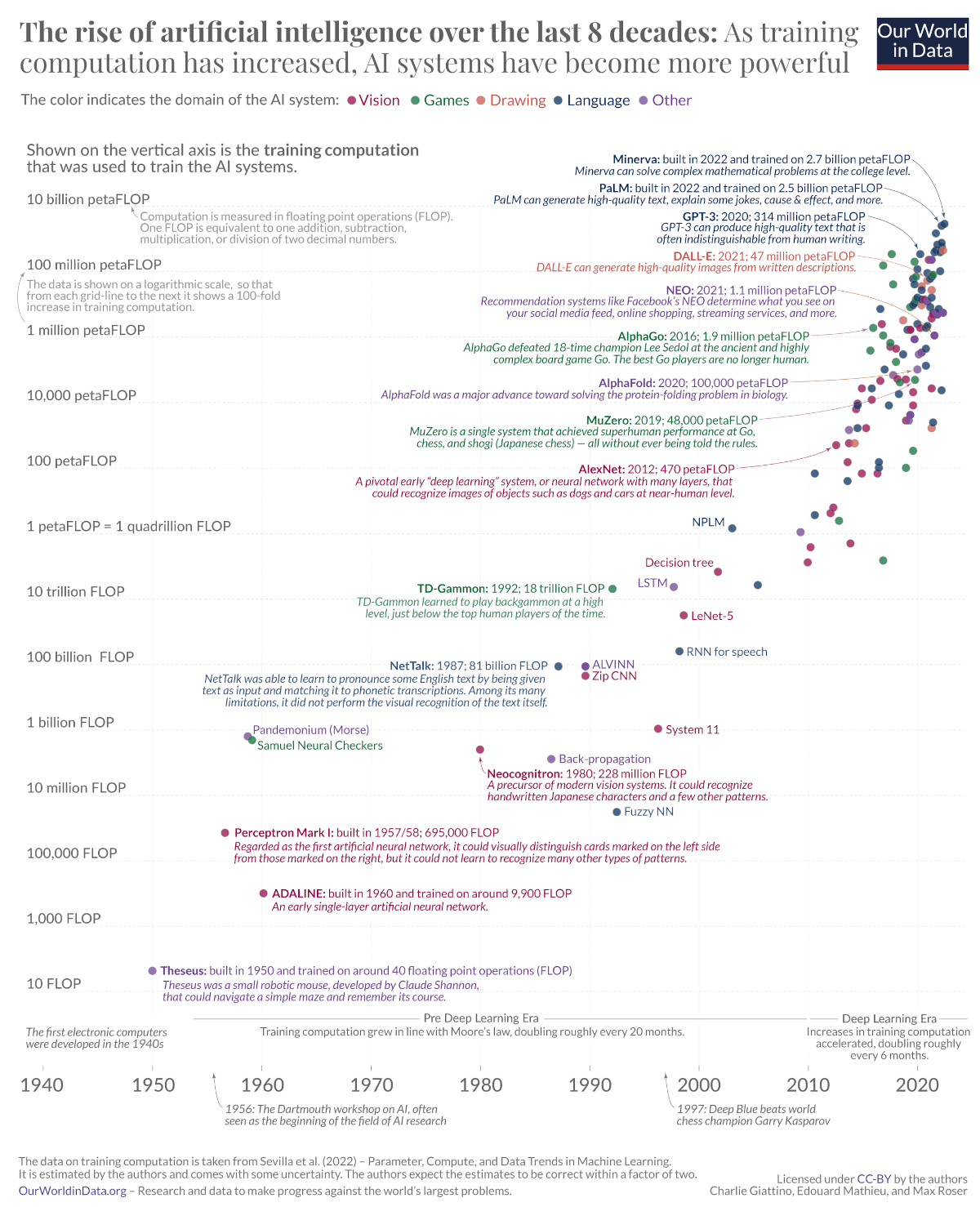

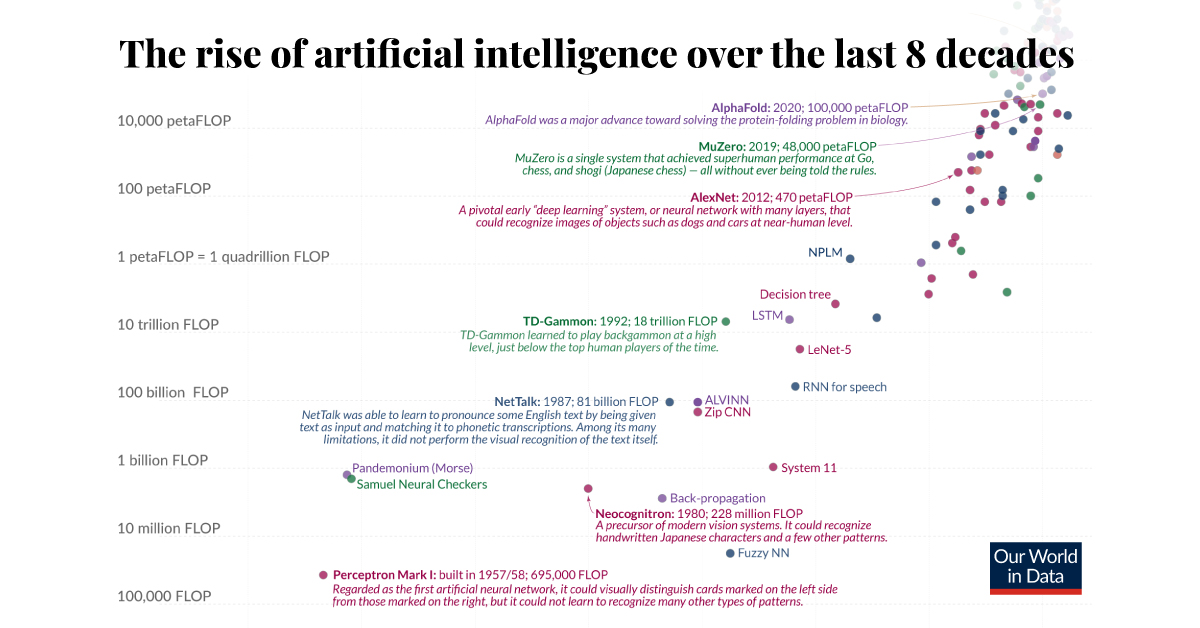

Electronic computers, which emerged in the 1940s, had barely been around for a decade before experiments with AI began. Now we have AI models that can write poetry and generate images from textual prompts. But what has led to such exponential growth in such a short time?

This chart from Our World in Data tracks the history of AI through the amount of computation used to train each AI model, using data from Epoch AI.

The Three Eras of AI Computation

In the 1950s, American mathematician Claude Shannon trained a robotic mouse called Theseus to navigate a maze and remember its course—the first apparent artificial learning of any kind.

Theseus was trained with roughly 40 floating point operations (FLOPs), a unit that counts the basic arithmetic operations (addition, subtraction, multiplication, or division) a computer or processor performs. In this article, FLOPs measure the total number of operations used in training, not FLOPS (operations per second), which describes a processor’s speed.

Computation power, availability of training data, and algorithms are the three main ingredients of AI progress. For the first few decades of AI advances, compute (the computational power needed to train an AI model) grew roughly in line with Moore’s Law, doubling every 18 to 24 months.

| Period | Era | Compute Doubling Time |
|---|---|---|
| 1950–2010 | Pre-Deep Learning | 18–24 months |
| 2010–2016 | Deep Learning | 5–7 months |
| 2016–2022 | Large-scale models | 11 months |

Source: “Compute Trends Across Three Eras of Machine Learning” by Sevilla et al., 2022.

However, at the start of the Deep Learning Era, heralded by AlexNet (an image recognition AI) in 2012, that doubling time shortened considerably to roughly six months, as researchers invested more in computation and processors.

With the emergence of AlphaGo in 2015—a computer program that beat a human professional Go player—researchers have identified a third era: that of the large-scale AI models whose computation needs dwarf all previous AI systems.
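To make the three doubling times easier to compare, the short Python sketch below converts each one into an approximate yearly growth factor. It is only an illustration: the function name `annual_growth_factor` is our own, and where the table gives a range we use its midpoint, which is an assumption rather than a figure from the paper.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Factor by which compute multiplies over 12 months at a given doubling time."""
    return 2 ** (12 / doubling_months)


# Doubling times in months, taken from the table above.
# Ranges (18-24 and 5-7 months) are replaced by their midpoints: an assumption.
eras = {
    "Pre-Deep Learning (1950-2010)": 21.0,
    "Deep Learning (2010-2016)": 6.0,
    "Large-scale models (2016-2022)": 11.0,
}

for era, months in eras.items():
    print(f"{era}: ~{annual_growth_factor(months):.2f}x per year")
```

A six-month doubling time means compute quadruples every year, while an 11-month doubling time works out to a little more than a doubling per year.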

Predicting AI Computation Progress

Looking back at only the last decade, compute has grown so tremendously that it’s difficult to comprehend.

For example, the compute used to train Minerva, an AI that can solve complex math problems, is nearly 6 million times the amount used to train AlexNet 10 years earlier.

Here’s a list of important AI models through history and the amount of compute used to train them.

| AI Model | Year | Training Compute (FLOPs) |
|---|---|---|
| Theseus | 1950 | 40 |
| Perceptron Mark I | 1957–58 | 695,000 |
| Neocognitron | 1980 | 228 million |
| NetTalk | 1987 | 81 billion |
| TD-Gammon | 1992 | 18 trillion |
| NPLM | 2003 | 1.1 petaFLOPs |
| AlexNet | 2012 | 470 petaFLOPs |
| AlphaGo | 2016 | 1.9 million petaFLOPs |
| GPT-3 | 2020 | 314 million petaFLOPs |
| Minerva | 2022 | 2.7 billion petaFLOPs |

Note: One petaFLOP = one quadrillion FLOPs. Source: “Compute Trends Across Three Eras of Machine Learning” by Sevilla et al., 2022.
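The “nearly 6 million times” comparison between Minerva and AlexNet can be checked directly from the table. The sketch below does that arithmetic and also derives the doubling time implied by those two data points alone. The variable names are ours, and the implied doubling time is a back-of-the-envelope figure, not the fitted trend reported in the paper.

```python
import math

PETA = 1e15  # one petaFLOP = one quadrillion FLOPs

# Training compute from the table above.
alexnet_flops = 470 * PETA      # AlexNet, 2012
minerva_flops = 2.7e9 * PETA    # Minerva, 2022

# How many times more compute Minerva used than AlexNet.
ratio = minerva_flops / alexnet_flops

# Number of doublings needed to get from AlexNet to Minerva,
# and the doubling time that implies over the 10 years between them.
doublings = math.log2(ratio)
months_elapsed = (2022 - 2012) * 12
implied_doubling_months = months_elapsed / doublings

print(f"Minerva / AlexNet compute ratio: {ratio:,.0f}")
print(f"Doublings: {doublings:.1f}")
print(f"Implied doubling time: {implied_doubling_months:.1f} months")
```

Going from AlexNet to Minerva takes about 22 doublings, or one doubling roughly every five months. Because the two models sit in different eras, this blends the Deep Learning and large-scale trends and should be read as an illustration only.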

This growth in computation, along with the availability of massive data sets and better algorithms, has yielded a lot of AI progress in seemingly very little time. AI now doesn’t just match human performance in many areas, it beats it.

It’s difficult to say if the same pace of computation growth will be maintained. Large-scale models require ever more compute to train, and if computation doesn’t continue to ramp up, progress could slow down. Exhausting all the data currently available for training AI models could also impede the development and implementation of new models.

However, with all the funding poured into AI recently, perhaps more breakthroughs are around the corner, such as matching the computation power of the human brain.

Where Does This Data Come From?

Source: “Compute Trends Across Three Eras of Machine Learning” by Sevilla et al., 2022.

Note: Estimates of how long it takes compute to double vary between studies, including Amodei and Hernandez (2018) and Lyzhov (2021). This article is based on our source’s findings; please see the full paper for further details. The authors also acknowledge the framing concerns with deeming an AI model “regular-sized” or “large-sized” and say further research is needed in this area.

Methodology: The authors of the paper used two methods to determine the amount of compute used to train AI models: counting the number of operations and tracking GPU time. Both approaches have drawbacks, namely a lack of transparency around training processes and growing complexity as ML models scale.
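As a rough sketch of what the two estimation methods look like in practice, the Python example below implements one simplified version of each. Everything here is an assumption for illustration: the function names are ours, the factor of 6 FLOPs per parameter per training token is a widely used rule of thumb for dense neural networks rather than necessarily the paper’s exact formula, the GPT-3 parameter and token counts come from the GPT-3 publication rather than from this article, and the GPU figures are hypothetical.

```python
def compute_from_operations(parameters: float, training_tokens: float,
                            flops_per_param_per_token: float = 6.0) -> float:
    """Method 1 (operation counting): approximate total training FLOPs.

    Assumes about 2 FLOPs per parameter for the forward pass and about
    4 for the backward pass, per training token (a rule of thumb).
    """
    return flops_per_param_per_token * parameters * training_tokens


def compute_from_gpu_time(num_gpus: int, training_seconds: float,
                          peak_flops_per_second: float,
                          utilization: float) -> float:
    """Method 2 (GPU time): hardware throughput times time, scaled by utilization."""
    return num_gpus * training_seconds * peak_flops_per_second * utilization


# Operation counting for GPT-3: ~175 billion parameters, ~300 billion tokens
# (figures from the GPT-3 publication, not from this article).
gpt3_estimate = compute_from_operations(175e9, 300e9)
print(f"GPT-3 estimate: {gpt3_estimate / 1e15 / 1e6:.0f} million petaFLOPs")

# GPU-time estimate for a HYPOTHETICAL run: 1,000 GPUs for 30 days,
# 1e14 FLOP/s peak per GPU, 30% average utilization.
gpu_estimate = compute_from_gpu_time(1000, 30 * 24 * 3600, 1e14, 0.30)
print(f"Hypothetical run: {gpu_estimate:.3e} FLOPs")
```

The operation-counting estimate for GPT-3 lands close to the 314 million petaFLOPs listed in the table. The GPU-time method depends heavily on the utilization figure, which is rarely published, and that is one reason the article notes a lack of transparency as a drawback.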

This article was published as a part of Visual Capitalist's Creator Program, which features data-driven visuals from some of our favorite Creators around the world.


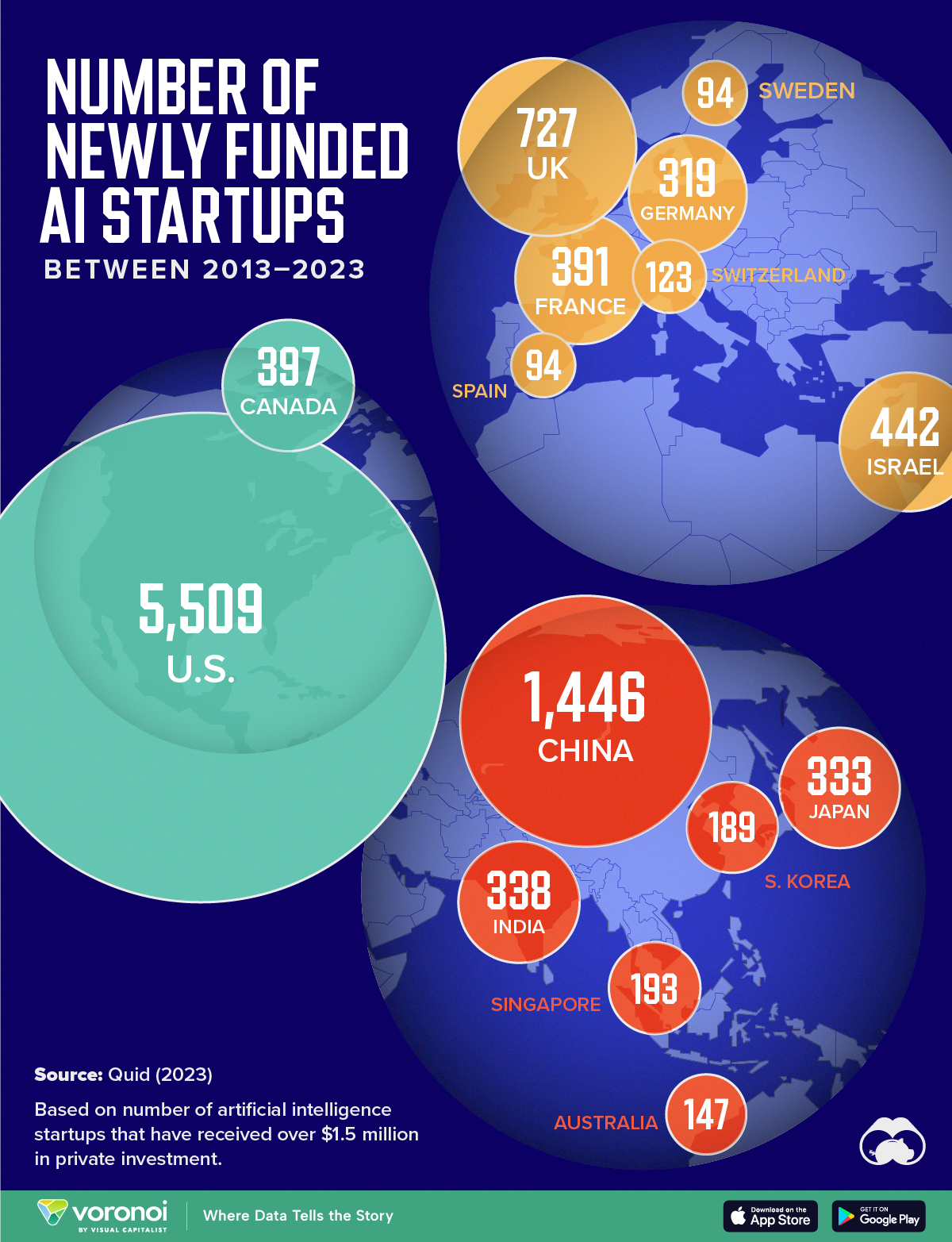

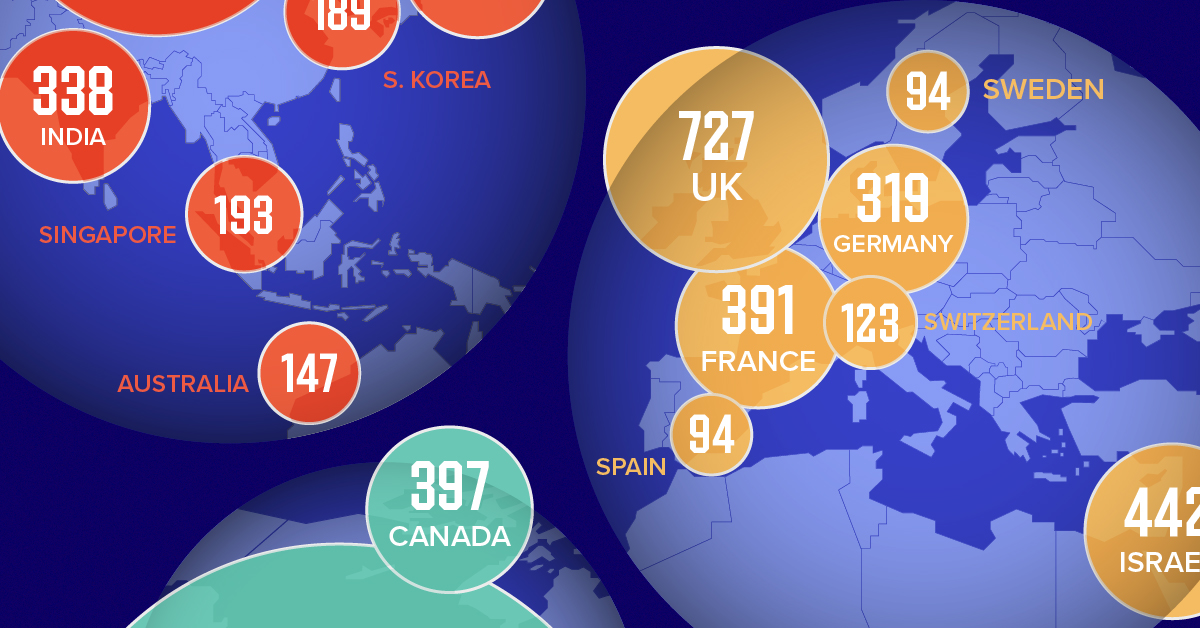

Mapped: The Number of AI Startups By Country

Over the past decade, thousands of AI startups have been funded worldwide. See which countries are leading the charge in this map graphic.

This was originally posted on our Voronoi app. Download the app for free on iOS or Android and discover incredible data-driven charts from a variety of trusted sources.

Amidst the recent expansion of artificial intelligence (AI), we’ve visualized data from Quid (accessed via Stanford’s 2024 AI Index Report) to highlight the top 15 countries which have seen the most AI startup activity over the past decade.

The figures in this graphic represent the number of newly funded AI startups in each country between 2013 and 2023. Only companies that received over $1.5 million in private investment were considered.

Data and Highlights

The following table lists all of the numbers featured in the above graphic.

| Rank | Geographic area | Number of newly funded AI startups (2013-2023) |
|---|---|---|
| 1 | 🇺🇸 United States | 5,509 |
| 2 | 🇨🇳 China | 1,446 |
| 3 | 🇬🇧 United Kingdom | 727 |
| 4 | 🇮🇱 Israel | 442 |
| 5 | 🇨🇦 Canada | 397 |
| 6 | 🇫🇷 France | 391 |
| 7 | 🇮🇳 India | 338 |
| 8 | 🇯🇵 Japan | 333 |
| 9 | 🇩🇪 Germany | 319 |
| 10 | 🇸🇬 Singapore | 193 |
| 11 | 🇰🇷 South Korea | 189 |
| 12 | 🇦🇺 Australia | 147 |
| 13 | 🇨🇭 Switzerland | 123 |
| 14 | 🇸🇪 Sweden | 94 |
| 15 | 🇪🇸 Spain | 94 |

From this data, we can see that the U.S., China, and the UK have established themselves as major hotbeds for AI innovation.
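To put the lead of the top three in proportion, the sketch below computes each country’s share of the startups listed in the table. Note the assumption: the shares are relative to the top-15 total only, since the table does not give a worldwide figure. Variable names are ours.

```python
# Newly funded AI startups (2013-2023), from the table above.
startups = {
    "United States": 5509, "China": 1446, "United Kingdom": 727,
    "Israel": 442, "Canada": 397, "France": 391, "India": 338,
    "Japan": 333, "Germany": 319, "Singapore": 193, "South Korea": 189,
    "Australia": 147, "Switzerland": 123, "Sweden": 94, "Spain": 94,
}

# Total across the 15 listed countries (not a global total).
top15_total = sum(startups.values())

# Share of the top-15 total held by each country.
shares = {country: count / top15_total for country, count in startups.items()}

us_share = shares["United States"]
top3_share = sum(shares[c] for c in ("United States", "China", "United Kingdom"))

print(f"Top-15 total: {top15_total:,}")
print(f"U.S. share of top 15: {us_share:.1%}")
print(f"U.S. + China + UK share of top 15: {top3_share:.1%}")
```

Within this group, the U.S. alone accounts for roughly half of the newly funded startups, and the top three countries together account for about 70%.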

In terms of funding, the U.S. is massively ahead, with private AI investment totaling $335 billion between 2013 and 2023. AI startups in China raised $104 billion over the same timeframe, while those in the UK raised $22 billion.

Further analysis reveals that the U.S. is widening this gap. In 2023, for example, private investment in the U.S. grew by 22% from 2022 levels. Meanwhile, investment fell in China (-44%) and the UK (-14.1%) over the same time span.

Where is All This Money Flowing To?

Quid also breaks down total private AI investment by focus area, providing insight into which sectors are receiving the most funding.

| Focus Area | Global Investment in 2023 (USD billions) |
|---|---|
| 🤖 AI infrastructure, research, and governance | $18.3 |
| 🗣️ Natural language processing | $8.1 |
| 📊 Data management | $5.5 |
| ⚕️ Healthcare | $4.2 |
| 🚗 Autonomous vehicles | $2.7 |
| 💰 Fintech | $2.1 |
| ⚛️ Quantum computing | $2.0 |
| 🔌 Semiconductor | $1.7 |
| ⚡ Energy, oil, and gas | $1.5 |
| 🎨 Creative content | $1.3 |
| 📚 Education | $1.2 |
| 📈 Marketing | $1.1 |
| 🛸 Drones | $1.0 |
| 🔒 Cybersecurity | $0.9 |
| 🏭 Manufacturing | $0.9 |
| 🛒 Retail | $0.7 |
| 🕶️ AR/VR | $0.7 |
| 🛡️ Insurtech | $0.6 |
| 🎬 Entertainment | $0.5 |
| 💼 VC | $0.5 |
| 🌾 Agritech | $0.5 |
| ⚖️ Legal tech | $0.4 |
| 👤 Facial recognition | $0.3 |
| 🌐 Geospatial | $0.2 |
| 💪 Fitness and wellness | $0.2 |

Attracting the most money is AI infrastructure, research, and governance, which refers to startups that are building AI applications (like OpenAI’s ChatGPT).

The second biggest focus area is natural language processing (NLP), which is a type of AI that enables computers to understand and interpret human language. This technology has numerous use cases for businesses, particularly in financial services, where NLP can power customer support chatbots and automated wealth advisors.

With $8 billion invested into NLP-focused startups during 2023, investors appear keenly aware of this technology’s transformative potential.

Learn More About AI From Visual Capitalist

If you enjoyed this graphic, be sure to check out Visualizing AI Patents by Country.

-

Politics6 days ago

Politics6 days agoCharted: Trust in Government Institutions by G7 Countries

-

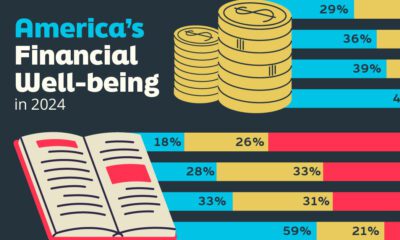

Money2 weeks ago

Money2 weeks agoCharted: Who Has Savings in This Economy?

-

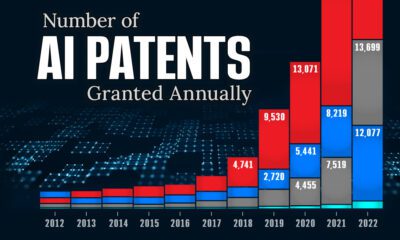

AI2 weeks ago

AI2 weeks agoVisualizing AI Patents by Country

-

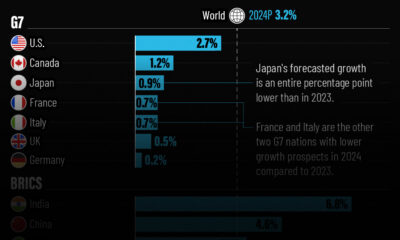

Markets2 weeks ago

Markets2 weeks agoEconomic Growth Forecasts for G7 and BRICS Countries in 2024

-

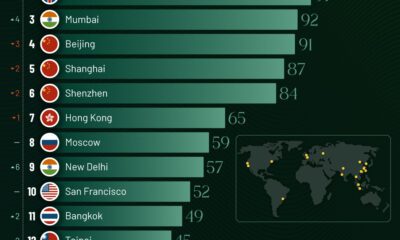

Wealth2 weeks ago

Wealth2 weeks agoCharted: Which City Has the Most Billionaires in 2024?

-

Technology1 week ago

Technology1 week agoAll of the Grants Given by the U.S. CHIPS Act

-

Green1 week ago

Green1 week agoThe Carbon Footprint of Major Travel Methods

-

United States1 week ago

United States1 week agoVisualizing the Most Common Pets in the U.S.