How AI and the Metaverse Will Impact the Datasphere

The datasphere—the infrastructure that stores and processes our data—is critical to many of the advanced technologies on which we rely.

So we partnered with HIVE Digital on this infographic to take a deep dive into how it could evolve to meet the twin challenges of AI and the metaverse.

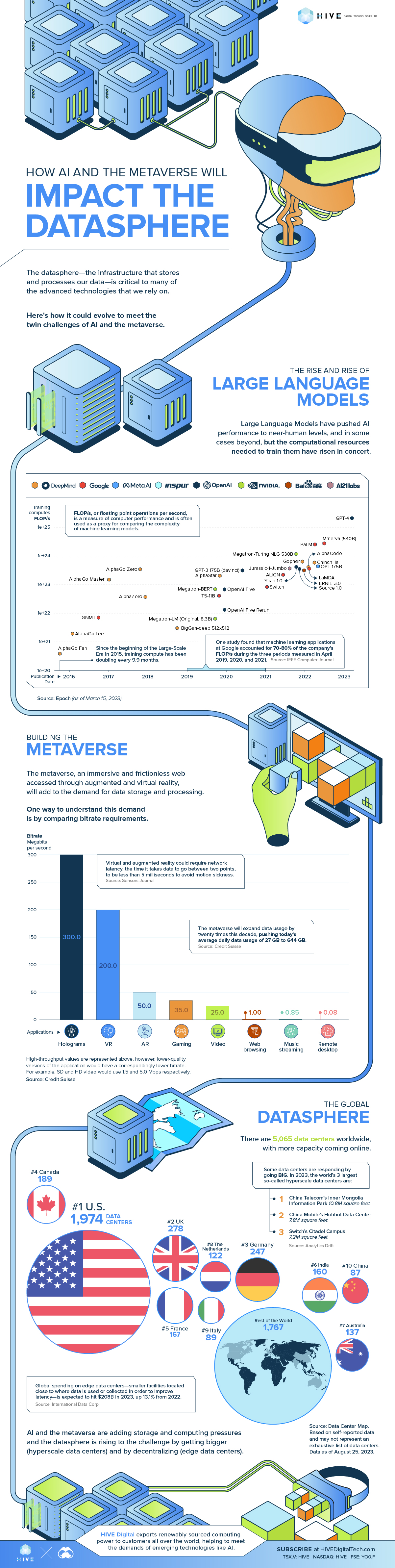

The Rise and Rise of Large Language Models

If the second decade of the 21st century is remembered for anything, it will probably be the leaps and bounds made in the field of AI. Large language models (LLMs) have pushed AI performance to near-human levels, and in some cases beyond. But to get there, it is taking more and more computational resources to train and operate them.

The Large-Scale Era is often considered to have started in late 2015 with the release of DeepMind’s AlphaGo Fan, the first computer to defeat a professional Go player.

| AI System | Organization | Publication Date | Training Compute (FLOP) |
|---|---|---|---|
| AlphaGo Fan | DeepMind | 2015-Oct | 3.80E+20 |
| AlphaGo Lee | DeepMind | 2016-01-27 | 1.90E+21 |
| GNMT | Google | 2016-09-26 | 6.90E+21 |
| AlphaGo Master | DeepMind | 2017-01-01 | 1.50E+23 |
| AlphaGo Zero | DeepMind | 2017-10-18 | 3.41E+23 |
| AlphaZero | DeepMind | 2017-12-05 | 3.67E+22 |
| BigGAN-deep 512x512 | Heriot-Watt University, DeepMind | 2018-09-28 | 3.00E+21 |
| Megatron-BERT | NVIDIA | 2019-09-17 | 6.90E+22 |
| Megatron-LM (Original, 8.3B) | NVIDIA | 2019-Sep | 9.10E+21 |
| T5-11B | Google | 2019-Oct | 4.05E+22 |
| AlphaStar | DeepMind | 2019-10-30 | 2.02E+23 |
| OpenAI Five | OpenAI | 2019-12-13 | 6.70E+22 |
| OpenAI Five Rerun | OpenAI | 2019-12-13 | 1.30E+22 |
| GPT-3 175B (davinci) | OpenAI | 2020-May | 3.14E+23 |
| Switch | Google | 2021-01-11 | 8.22E+22 |
| ALIGN | Google | 2021-06-11 | 2.15E+23 |
| ERNIE 3.0 | Baidu Inc. | 2021-Jul | 2.35E+18 |
| Jurassic-1-Jumbo | AI21 Labs | 2021-Aug | 3.70E+23 |
| Megatron-Turing NLG 530B | Microsoft, NVIDIA | 2021-Oct | 1.17E+24 |
| Yuan 1.0 | Inspur | 2021-10-12 | 4.10E+23 |
| Source 1.0 | Inspur | 2021-11-10 | 3.54E+23 |
| Gopher | DeepMind | 2021-Dec | 6.31E+23 |
| AlphaCode | DeepMind | 2022-Feb | 4.05E+23 |
| LaMDA | Google | 2022-02-10 | 3.55E+23 |
| Chinchilla | DeepMind | 2022-Mar | 5.76E+23 |
| PaLM (540B) | Google | 2022-Apr | 2.53E+24 |
| OPT-175B | Meta AI | 2022-May | 4.30E+23 |
| Minerva (540B) | Google | 2022-Jun | 2.74E+24 |
| GPT-4 | OpenAI | 2023-Mar | 2.10E+25 |

AlphaGo Fan required a training compute of 380 quintillion FLOP (floating-point operations), a measure of the total computation used to train a model. In 2023, OpenAI's GPT-4 had a training compute 55 thousand times greater, at 21 septillion FLOP.
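As a quick check on the arithmetic, the ratio can be recomputed directly from the two table entries. This is a minimal sketch; the variable names are illustrative, and it treats both figures as total training operations rather than a per-second rate.

```python
# Training compute from the table, in total FLOP (operations, not FLOP/s)
alphago_fan_flop = 3.80e20  # AlphaGo Fan, October 2015
gpt4_flop = 2.10e25         # GPT-4, March 2023

# How many times more compute GPT-4 used than AlphaGo Fan
ratio = gpt4_flop / alphago_fan_flop

print(f"GPT-4:       {gpt4_flop:.2e} FLOP")
print(f"AlphaGo Fan: {alphago_fan_flop:.2e} FLOP")
print(f"Ratio:       about {ratio:,.0f}x")  # about 55,263x
```

The result, roughly 55,263, is where the "55 thousand times" figure comes from.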

At this rate of growth—essentially doubling every 9.9 months—future AI systems will need exponentially larger computers to train and operate them.
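A doubling time converts directly into a growth factor: after t months, compute grows by a factor of 2^(t / 9.9). The sketch below assumes the 9.9-month figure holds steadily, which real trends need not do; the helper names `growth_factor` and `projected_compute` are hypothetical. Under that assumption, one year of growth works out to roughly 2.3x.

```python
DOUBLING_MONTHS = 9.9  # trend doubling time quoted in the text


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative growth in training compute after `months` of steady doubling."""
    return 2 ** (months / doubling_months)


def projected_compute(base_flop: float, months: float) -> float:
    """Hypothetical helper: extrapolate a starting compute budget forward in time."""
    return base_flop * growth_factor(months)


annual = growth_factor(12)
print(f"One year of 9.9-month doubling multiplies compute by about {annual:.2f}x")

# Illustrative only: naive extrapolation of GPT-4's table figure three years ahead
print(f"{projected_compute(2.10e25, 36):.2e} FLOP")
```

The three-year projection is a naive extrapolation for illustration, not a forecast.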

Building the Metaverse

The metaverse, an immersive and frictionless web accessed through augmented and virtual reality (AR and VR), will only add to these demands. One way to quantify this demand is to compare bitrates across applications; bitrate measures the amount of data (i.e. bits) transmitted per second.

On the low end, music streaming, web browsing, and gaming all have relatively low bitrate requirements. Only game streaming breaks the 1 Mbps (megabit per second) threshold. Things go up from there, and fast: AR, VR, and holograms, all technologies that will be integral to the metaverse, top out at 300 Mbps.

Consider also that VR and AR require incredibly low latency, less than five milliseconds, to avoid motion sickness. So not only will the metaverse increase the amount of data that needs to be moved, to 644 GB per household per day, but it will also need to move that data very quickly.
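To connect bitrates to daily data volumes, the sketch below converts between Mbps and gigabytes. It assumes decimal units (1 GB = 10^9 bytes) and, for the household figure, that the 644 GB is spread evenly over 24 hours; the function names are hypothetical. On those assumptions, 644 GB per day averages out to roughly 60 Mbps around the clock, and one hour of a 300 Mbps AR/VR stream moves 135 GB.

```python
SECONDS_PER_DAY = 24 * 60 * 60
BITS_PER_BYTE = 8


def gb_per_day_to_mbps(gb_per_day: float) -> float:
    """Average sustained bitrate (Mbps) needed to move `gb_per_day` decimal GB in 24 h."""
    bits = gb_per_day * 1e9 * BITS_PER_BYTE
    return bits / SECONDS_PER_DAY / 1e6


def mbps_to_gb_per_hour(mbps: float) -> float:
    """Data volume (decimal GB) moved by a stream running at `mbps` for one hour."""
    return mbps * 1e6 * 3600 / BITS_PER_BYTE / 1e9


household_mbps = gb_per_day_to_mbps(644)   # about 59.6 Mbps, all day
vr_gb_per_hour = mbps_to_gb_per_hour(300)  # 135 GB per hour at 300 Mbps

print(f"644 GB/day is about {household_mbps:.1f} Mbps sustained")
print(f"300 Mbps for one hour is about {vr_gb_per_hour:.0f} GB")
```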

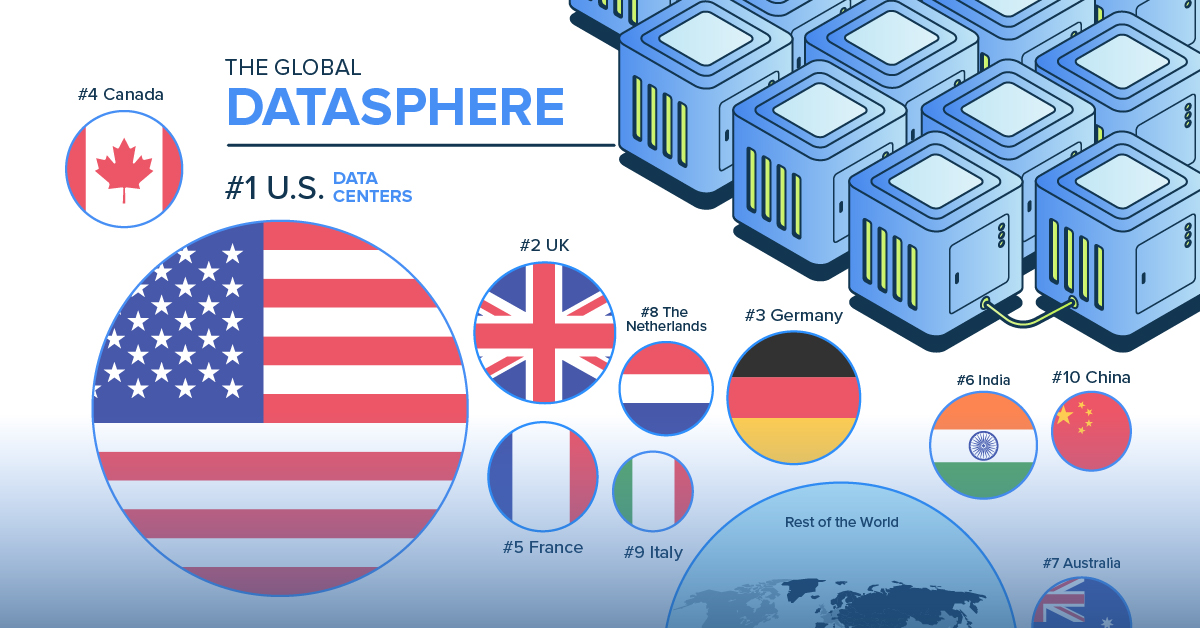

The Global Datasphere

At the time of writing there are 5,065 data centers worldwide, with 39.0% located in the U.S. The next largest national player is the UK, with only 5.5%. Data centers not only store the data we produce but also run the applications we rely on. And they are evolving.

There are two broad approaches that data centers are taking to get ahead of the demand curve. The first and probably most obvious option is going BIG. The world’s three largest hyperscale data centers are:

- 🇨🇳 China Telecom’s Inner Mongolia Information Park (10.8 million square feet)

- 🇨🇳 China Mobile’s Hohhot Data Center (7.8 million square feet)

- 🇺🇸 Switch’s Citadel Campus (7.2 million square feet)

The other route is to go small, but closer to where the action is. This is what edge computing does: it decentralizes the data center to reduce latency. This approach will likely play a big part in the rollout of self-driving vehicles, where safety depends on speed.
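Latency is where the physical location of a data center starts to matter. The sketch below estimates how far away a server could be while staying within a round-trip budget such as the sub-five-millisecond target for VR and AR. It assumes signals travel through optical fiber at about 200 km per millisecond (roughly two-thirds the speed of light), treats the whole budget as network round-trip time, and ignores routing, queuing, and processing delays, so the result is a generous upper bound. `max_server_distance_km` is a hypothetical helper.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
# Real networks add routing, queuing, and processing delays, so the
# distances computed here are upper bounds.
FIBER_KM_PER_MS = 200.0


def max_server_distance_km(round_trip_budget_ms: float) -> float:
    """Farthest one-way fiber distance to a server if the entire
    round-trip budget were spent on signal propagation alone."""
    one_way_ms = round_trip_budget_ms / 2
    return one_way_ms * FIBER_KM_PER_MS


print(max_server_distance_km(5.0))  # 500.0
```

Even in this idealized case, a 5 ms round trip rules out servers more than about 500 km away, which is one reason to move computing closer to users.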

And investors are putting their money behind the idea. Global spending on edge computing is expected to hit $208 billion in 2023, up 13.1% from 2022.
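The 2023 figure and its growth rate together imply the 2022 baseline. A minimal sketch of that back-calculation, assuming the 13.1% is a simple year-over-year increase on the prior year's total:

```python
spend_2023_bn = 208.0  # USD billions, 2023 figure from the text
growth = 0.131         # 13.1% increase over 2022

# Undo one year of growth to recover the implied 2022 total
implied_2022_bn = spend_2023_bn / (1 + growth)
print(f"Implied 2022 spending: ${implied_2022_bn:.1f} billion")  # about $183.9 billion
```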

Welcome to the Zettabyte Era

The International Data Corporation projects that the amount of data produced annually will grow to 221 zettabytes by 2026, at a compound annual growth rate of 21.2%. With the zettabyte era nearly upon us, data centers will have a critical role to play.
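A compound annual growth rate works backwards as easily as forwards: dividing the 2026 target by (1 + CAGR) raised to the number of years recovers the starting value. The text does not state the forecast's base year, so the sketch below assumes a five-year window starting in 2021; under that assumption the implied starting point is roughly 84.5 ZB.

```python
target_zb = 221.0  # projected annual data production in 2026
cagr = 0.212       # compound annual growth rate
years = 5          # assumption: forecast window runs 2021 to 2026

# Back out the base-year value, then rebuild the year-by-year path
implied_base_zb = target_zb / (1 + cagr) ** years
trajectory = {2021 + i: implied_base_zb * (1 + cagr) ** i for i in range(years + 1)}

for year, zb in trajectory.items():
    print(f"{year}: {zb:.1f} ZB")
```

Each year in the printed path is 21.2% larger than the last, ending at 221 ZB in 2026.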

Learn more about how HIVE Digital exports renewably sourced computing power to customers all over the world, helping to meet the demands of emerging technologies like AI.

-

Technology1 day ago

Technology1 day agoAll of the Grants Given by the U.S. CHIPS Act

Intel, TSMC, and more have received billions in subsidies from the U.S. CHIPS Act in 2024.

-

Technology3 days ago

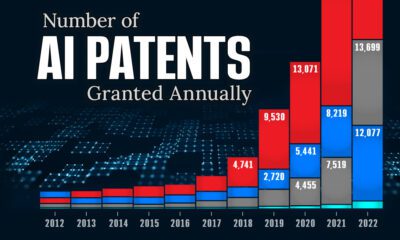

Technology3 days agoVisualizing AI Patents by Country

See which countries have been granted the most AI patents each year, from 2012 to 2022.

-

Brands5 days ago

Brands5 days agoHow Tech Logos Have Evolved Over Time

From complete overhauls to more subtle tweaks, these tech logos have had quite a journey. Featuring: Google, Apple, and more.

-

Technology3 weeks ago

Technology3 weeks agoRanked: Semiconductor Companies by Industry Revenue Share

Nvidia is coming for Intel’s crown. Samsung is losing ground. AI is transforming the space. We break down revenue for semiconductor companies.

-

AI3 weeks ago

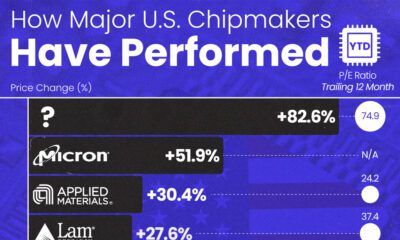

AI3 weeks agoThe Stock Performance of U.S. Chipmakers So Far in 2024

The Nvidia rocket ship is refusing to slow down, leading the pack of strong stock performance for most major U.S. chipmakers.

-

Technology3 weeks ago

Technology3 weeks agoRanked: The Most Popular Smartphone Brands in the U.S.

This graphic breaks down America’s most preferred smartphone brands, according to a December 2023 consumer survey.