Tap Into the Mobile Payments Revolution

The trends and contenders that are shaping mobile payments.

Thanks to Purefunds Mobile Payments ETF (IPAY) for helping us put this together.

Yesterday, user-friendly payment processor Stripe announced a strategic investment from, and partnership with, Visa that values the company at $5 billion. The other participating investors are well known too: Kleiner Perkins Caufield & Byers, American Express, and Sequoia. As recently as December, Stripe was valued at $3.5 billion, and in its previous financing round it was valued at just half of that. Stripe will use Visa’s international connections to help it expand beyond the 20 countries it currently serves.

This type of story is not unusual in the payments space. Companies are scrambling to scale or adopt new technologies that integrate mobile and electronic features, making it easier, cheaper, and faster for customers to pay. The reason is that the payments ecosystem has always been more cumbersome and more expensive than it should be. In the United States alone, retail merchants that accept card-based payments were charged about $67 billion in fees. Add the rest of the world to that pie, and it becomes clear that the payments space is as ripe for disruption as any other.

Mobile and electronic payments allow customers to pay for goods with a tap of a phone or the press of a button. Two of every three Americans have a smartphone, and mobile payments can typically be completed faster and with lower fees. The earliest adopters of mobile payments skew younger and more affluent: they average just over 30 years old, have a higher annual income, and spend more than twice as much on retail as non-users who are unwilling to adopt mobile payments.

Big Data and the Developing World

One of the most attractive benefits of mobile payments is the integration of big data and predictive analytics. Retailers will have the capability to link purchases directly with location (GPS), consumer behaviour, purchase history, demographics, and social influence. Analyzing this information will allow companies to reach out to consumers with tailored offerings, loyalty programs, and rewards. Customers will be able to take action right from their mobile device.

The opportunities in payments are not limited to the United States, or even to the developed world. Perhaps one of the most interesting opportunities for mobile payments is in Africa, where bank penetration is extremely low, at only about 25%, while mobile phone penetration is higher, at 60%. Kenya is a good example of a market where digitization has reached a large portion of the population, giving mobile payments an 86% household penetration rate.

McKinsey analyzed the size of mobile payments revenue pools if every market in Africa had the same penetration as Kenya, and it estimates that the pools would more than double in places like Ethiopia and Nigeria. With the population of sub-Saharan Africa expected to balloon from 926 million to 2.2 billion by 2050, there appears to be even greater opportunity.

Tapping In

The earliest potential in the mobile and electronic payments market appears to be in areas such as micropayments, incidental payments, recurring bills, peer-to-peer money transfers, and cryptocurrency. However, in the long term, the concept can be applied to many different facets of commerce.

Mobile payments may continue to disrupt the broader payments market because of several factors, including a young and growing user base, ease of use, faster transactions, lower costs, and increased adoption. As Smittipon Srethapramote, who covers the North American payments industry for Morgan Stanley, concludes in a summary on the subject: “Mobile Payments can expand the global revenue pie from $175 billion to $250 billion, including $45 billion in developed markets and $30 billion in emerging markets.”
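Read as a worked check, the figures in that quote appear to fit together: the $45 billion and $30 billion components add up to exactly the $75 billion gap between the two pool sizes. The short Python sketch below assumes that reading (that the two components describe the increase rather than the total pool) and works out the implied growth; the variable names are illustrative only.

```python
# Figures from the Morgan Stanley quote, in USD billions.
# Assumption: the developed/emerging amounts describe the *increase*
# in the revenue pool, not the size of the whole pool.
current_pool = 175
projected_pool = 250
developed_gain = 45
emerging_gain = 30

# Incremental revenue implied by moving from $175B to $250B
increase = projected_pool - current_pool

# The two regional gains should account for the full increase
components_match = (developed_gain + emerging_gain) == increase

# Implied growth of the global pool, as a percentage
growth_pct = increase / current_pool * 100

print(increase)             # 75
print(components_match)     # True
print(f"{growth_pct:.1f}%") # 42.9%
```

Under this reading, the projection amounts to roughly 43% growth in the global payments revenue pool.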

How Tech Logos Have Evolved Over Time

From complete overhauls to more subtle tweaks, these tech logos have had quite a journey. Featuring: Google, Apple, and more.

This was originally posted on our Voronoi app.

One would be hard-pressed to find a company that has never changed its logo. Granted, some brands—like Rolex, IBM, and Coca-Cola—tend to just have more minimalistic updates. But other companies undergo an entire identity change, thus necessitating a full overhaul.

In this graphic, we visualized the evolution of prominent tech companies’ logos over time. All of these brands ranked highly in a Q1 2024 YouGov study of America’s most famous tech brands. The logo changes are sourced from 1000logos.net.

How Many Times Has Google Changed Its Logo?

Google and Facebook share a 98% fame rating according to YouGov. But while Facebook’s rise was captured in The Social Network (2010), Google’s history tends to be a little less lionized in popular culture.

For example, Google was initially called “Backrub” because it analyzed “back links” to understand how important a website was. Since its founding, Google has undergone eight logo changes, finally settling on its current one in 2015.

| Company | Number of Logo Changes |
|---|---|
| Google | 8 |
| HP | 8 |
| Amazon | 6 |
| Microsoft | 6 |
| Samsung | 6 |
| Apple | 5* |

Note: *Includes color changes. Source: 1000Logos.net

Another fun origin story is Microsoft, which started off as Traf-O-Data, a company that read traffic-counter data and generated reports for traffic engineers. By 1975, the company had been renamed. But it wasn’t until 2012 that Microsoft placed the iconic Windows logo alongside its name; Windows is still the most popular desktop operating system.

And then there’s Samsung, which started as a grocery trading store in 1938. Its pivot to electronics started in the 1970s with black and white television sets. For 55 years, the company kept some form of stars from its first logo, until 1993, when the iconic encircled blue Samsung logo debuted.

Finally, Apple’s first logo in 1976 featured Isaac Newton reading under a tree—moments before an apple fell on his head. Two years later, the iconic bitten apple logo would be designed at Steve Jobs’ behest, and it would take another two decades for it to go monochrome.

-

Travel1 week ago

Travel1 week agoAirline Incidents: How Do Boeing and Airbus Compare?

-

Markets3 weeks ago

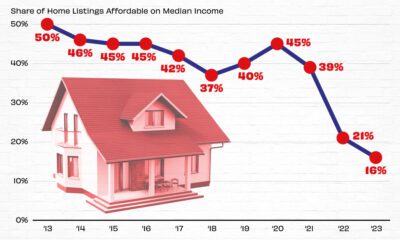

Markets3 weeks agoVisualizing America’s Shortage of Affordable Homes

-

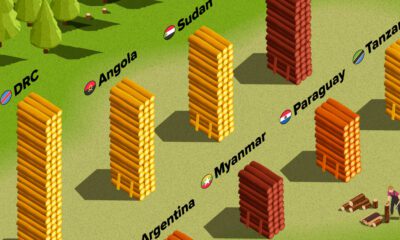

Green2 weeks ago

Green2 weeks agoRanked: Top Countries by Total Forest Loss Since 2001

-

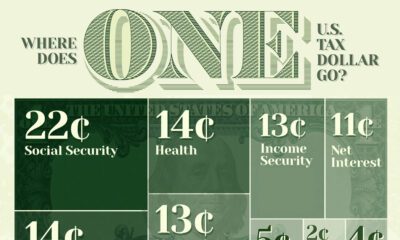

Money2 weeks ago

Money2 weeks agoWhere Does One U.S. Tax Dollar Go?

-

Misc2 weeks ago

Misc2 weeks agoAlmost Every EV Stock is Down After Q1 2024

-

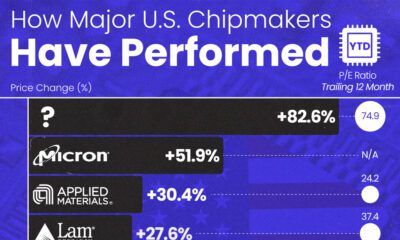

AI2 weeks ago

AI2 weeks agoThe Stock Performance of U.S. Chipmakers So Far in 2024

-

Markets2 weeks ago

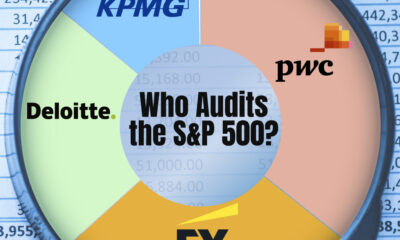

Markets2 weeks agoCharted: Big Four Market Share by S&P 500 Audits

-

Real Estate2 weeks ago

Real Estate2 weeks agoRanked: The Most Valuable Housing Markets in America