
AIoT: When Artificial Intelligence Meets the Internet of Things


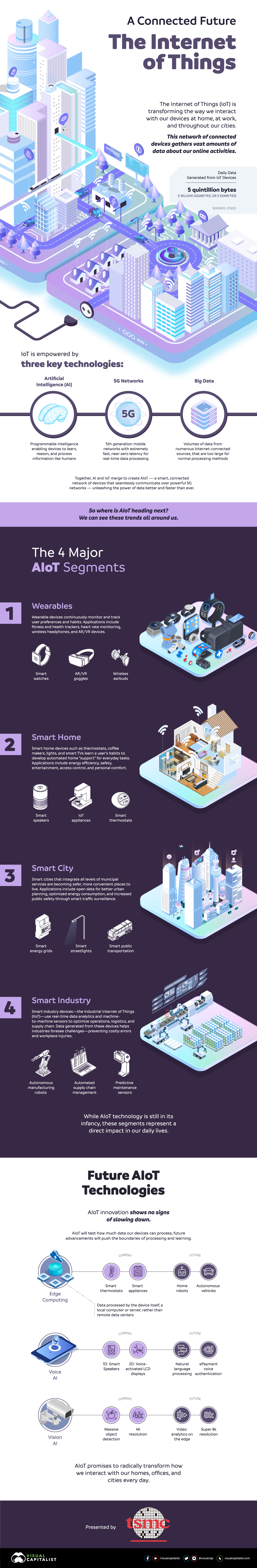

The Internet of Things (IoT) is helping us reimagine daily life, but artificial intelligence (AI) is the real driving force behind the IoT’s full potential.

From basic applications such as tracking our fitness levels to wide-reaching uses across industries and urban planning, the growing partnership between AI and the IoT means that a smarter future could arrive sooner than we think.

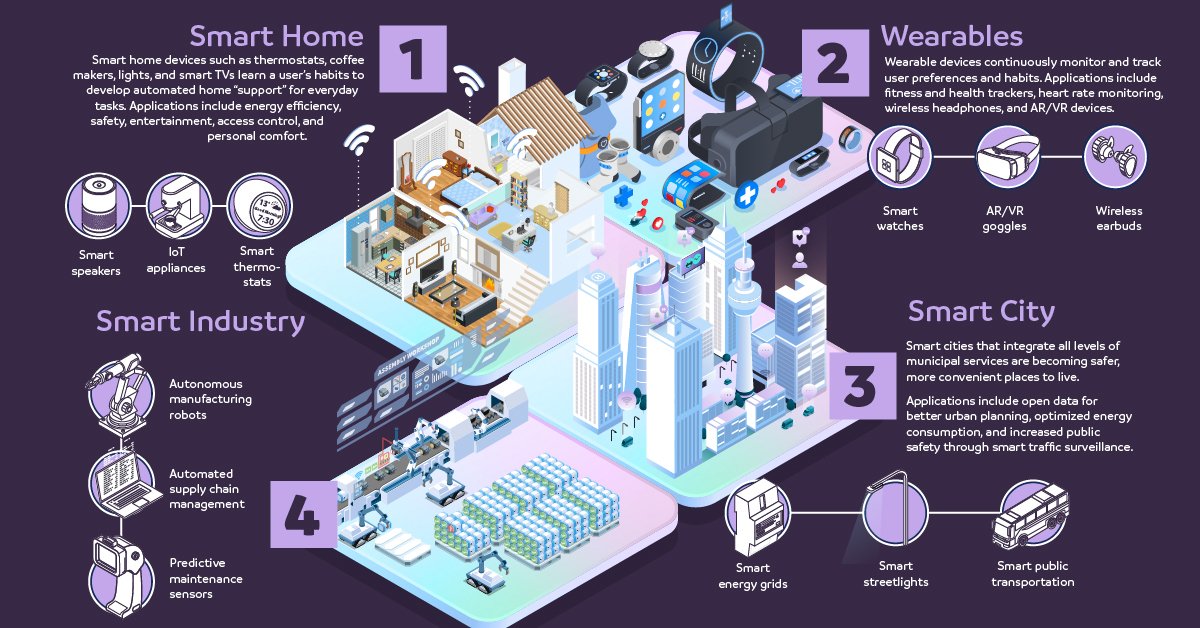

This infographic by TSMC highlights the breakthrough technologies and trends making that shift possible, and how we’re continuing to push the boundaries.

AI + IoT = Superpowers of Innovation

IoT devices use the internet to communicate, collect, and exchange information about our activities and surroundings. Every day, they generate 1 billion GB (roughly one exabyte) of data.

By 2025, there are projected to be 42 billion IoT-connected devices globally. As the number of devices grows, so will the volume of data they produce. That’s where AI steps in, lending its learning capabilities to the connectivity of the IoT.

The IoT is empowered by three key emerging technologies:

- Artificial Intelligence (AI): Programmable functions and systems that enable devices to learn, reason, and process information like humans.
- 5G Networks: Fifth-generation mobile networks with high speeds and near-zero lag for real-time data processing.
- Big Data: Enormous volumes of data processed from numerous internet-connected sources.

Together, these technologies are transforming the way we interact with devices at home and at work, creating the AIoT (“Artificial Intelligence of Things”) in the process.

The Major AIoT Segments

So where are AI and the IoT headed together?

There are four major segments in which the AIoT is making an impact: wearables, smart home, smart city, and smart industry.

1. Wearables

Wearable devices such as smartwatches continuously monitor and track user preferences and habits. This has led to impactful applications in the healthtech sector, as well as in sports and fitness. According to leading tech research firm Gartner, the global wearable device market is estimated to exceed $87 billion in revenue by 2023.

2. Smart Home

Houses that respond to your every request are no longer restricted to science fiction. Smart homes can connect appliances, lighting, electronic devices, and more, learning a homeowner’s habits and providing automated “support.”

This seamless control also brings the added benefit of improved energy efficiency. As a result, the smart home market could grow at a compound annual growth rate (CAGR) of 25% from 2020 to 2025, reaching $246 billion.
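As a rough check on what that projection implies, the standard CAGR relation links a starting value to an ending value over a number of annual periods. Assuming the 25% rate compounds annually over all five years from 2020 to 2025 (the base-year market size is not stated in the source, so the figure below is only an implied estimate), the 2020 smart home market would have been roughly $81 billion:

```latex
% Assumption: r = 0.25 compounds annually over n = 5 periods (2020 -> 2025)
V_{2025} = V_{2020}\,(1 + r)^{n}
\quad\Longrightarrow\quad
V_{2020} = \frac{V_{2025}}{(1 + r)^{n}} = \frac{246}{1.25^{5}} = \frac{246}{3.0518} \approx 80.6 \ \text{(billion USD)}
```

In other words, a 25% CAGR roughly triples a market in five years, which is why the projected end value is about three times the implied starting value.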

3. Smart City

As more and more people flock from rural to urban areas, cities are evolving into safer, more convenient places to live. Smart city innovations are keeping pace, with investments going towards improving public safety, transport, and energy efficiency.

The practical applications of AI in traffic control are already becoming clear. In New Delhi, home to some of the world’s most traffic-congested roads, an Intelligent Transport Management System (ITMS) is in use to make ‘real time dynamic decisions on traffic flows’.

4. Smart Industry

Last but not least, industries from manufacturing to mining rely on digital transformation to become more efficient and reduce human error.

From real-time data analytics to supply-chain sensors, smart devices help prevent costly errors in industry. In fact, Gartner also estimates that over 80% of enterprise IoT projects will incorporate AI by 2022.

The Untapped Potential of AI & IoT

AIoT innovation is only accelerating and promises to lead us into a more connected future.

| Category | Today | Tomorrow |
|---|---|---|
| Edge computing | Smart thermostats, smart appliances | Home robots, autonomous vehicles |
| Voice AI | Smart speakers | Natural language processing (NLP), ePayment voice authentication |
| Vision AI | Massive object detection | Video analytics on the edge, Super 8K resolution |

The AIoT fusion is becoming increasingly mainstream, and it is likely to keep pushing the boundaries of data processing and intelligent learning for years to come.

Just like any company that blissfully ignored the Internet at the turn of the century, the ones that dismiss the Internet of Things risk getting left behind.

—Jared Newman, Technology Analyst


How Tech Logos Have Evolved Over Time

From complete overhauls to subtle tweaks, these tech logos have had quite a journey, featuring Google, Apple, and more.


This was originally posted on our Voronoi app.

One would be hard-pressed to find a company that has never changed its logo. Granted, some brands, such as Rolex, IBM, and Coca-Cola, tend to make only minimal updates. Other companies undergo an entire identity change that calls for a full overhaul.

In this graphic, we visualized the evolution of prominent tech companies’ logos over time. All of these brands ranked highly in a Q1 2024 YouGov study of America’s most famous tech brands. The logo changes are sourced from 1000logos.net.

How Many Times Has Google Changed Its Logo?

Google and Facebook share a 98% fame rating according to YouGov. But while Facebook’s rise was captured in The Social Network (2010), Google’s history tends to be a little less lionized in popular culture.

For example, Google was initially called “Backrub” because it analyzed “back links” to understand how important a website was. Since its founding, Google has undergone eight logo changes, finally settling on its current one in 2015.

| Company | Number of Logo Changes |
|---|---|
| Google | 8 |
| HP | 8 |
| Amazon | 6 |
| Microsoft | 6 |
| Samsung | 6 |
| Apple | 5* |

Note: *Includes color changes. Source: 1000Logos.net

Another fun origin story is Microsoft, which started off as Traf-O-Data, a company that read traffic counters and generated reports for traffic engineers. By 1975, the company had been renamed. But it wasn’t until 2012 that Microsoft put the iconic Windows logo alongside its name; Windows remains the most popular desktop operating system.

And then there’s Samsung, which started as a grocery trading store in 1938. Its pivot to electronics began in the 1970s with black-and-white television sets. For 55 years, the company kept some form of the stars from its first logo, until 1993, when the iconic encircled blue Samsung logo debuted.

Finally, Apple’s first logo in 1976 featured Isaac Newton reading under a tree, moments before an apple fell on his head. Two years later, the iconic bitten-apple logo was designed at Steve Jobs’ behest, and it would take another two decades for the logo to go monochrome.

-

Travel1 week ago

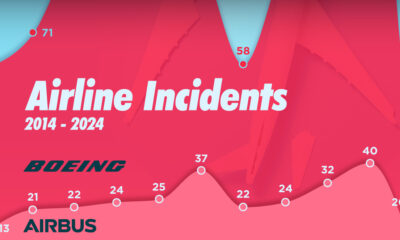

Travel1 week agoAirline Incidents: How Do Boeing and Airbus Compare?

-

Markets2 weeks ago

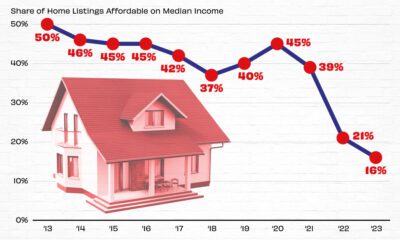

Markets2 weeks agoVisualizing America’s Shortage of Affordable Homes

-

Green2 weeks ago

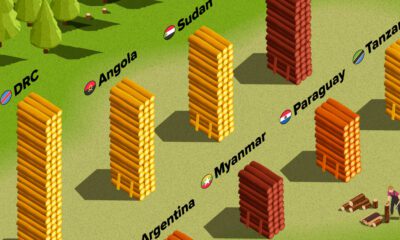

Green2 weeks agoRanked: Top Countries by Total Forest Loss Since 2001

-

Money2 weeks ago

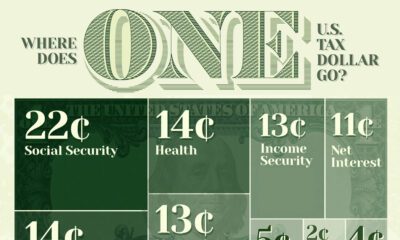

Money2 weeks agoWhere Does One U.S. Tax Dollar Go?

-

Misc2 weeks ago

Misc2 weeks agoAlmost Every EV Stock is Down After Q1 2024

-

AI2 weeks ago

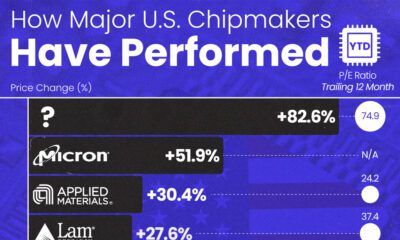

AI2 weeks agoThe Stock Performance of U.S. Chipmakers So Far in 2024

-

Markets2 weeks ago

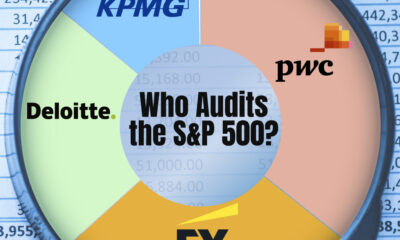

Markets2 weeks agoCharted: Big Four Market Share by S&P 500 Audits

-

Real Estate2 weeks ago

Real Estate2 weeks agoRanked: The Most Valuable Housing Markets in America